Oracle 11g Release 1 RAC On RHEL5 Linux Using VMware ESX Server and NFS

This article describes the installation of Oracle 11g release 1 (11.1 32-bit) RAC on Red Hat Enterprise Linux 5 (32-bit) using VMware ESX Server and NFS for shared storage.

- Introduction

- Download Software

- Virtual Machine Setup

- Guest Operating System Installation

- Oracle Installation Prerequisites

- Create Shared Disks

- Install the Clusterware Software

- Install the Database Software

- Create a Database using the DBCA

- TNS Configuration

- Check the Status of the RAC

- Direct NFS Client

Introduction

ESX Server is the enterprise-level hypervisor from VMware. It installs directly on "bare-metal" hardware, making it significantly more efficient than desktop virtualization tools. This article uses ESX Server to provide the infrastructure for an Oracle Real Application Clusters installation.

The article assumes you already have a VMware ESX Server and a VMware Infrastructure Client installation. The installation of these products is described here.

Download Software

Download the following software.

Virtual Machine Setup

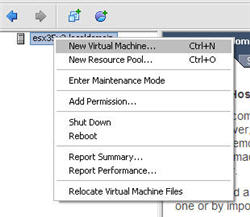

Right-click on the ESX server in the left-hand pane and select the "New Virtual Machine..." menu option.

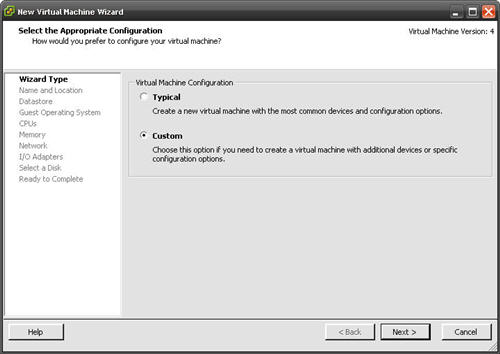

Select the custom option and click the "Next" button.

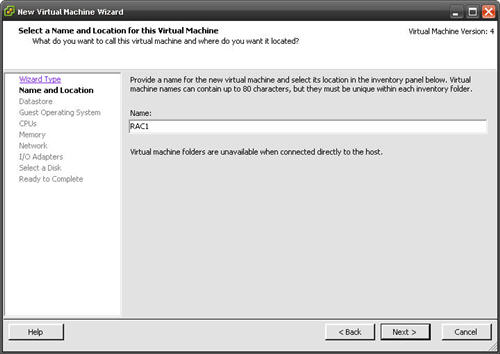

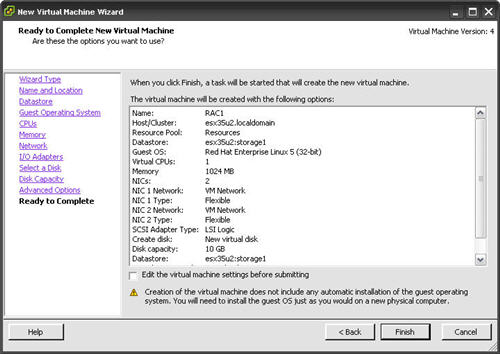

Enter the virtual machine name (RAC1) as you would like it to appear in the right-hand pane of the client, then click the "Next" button.

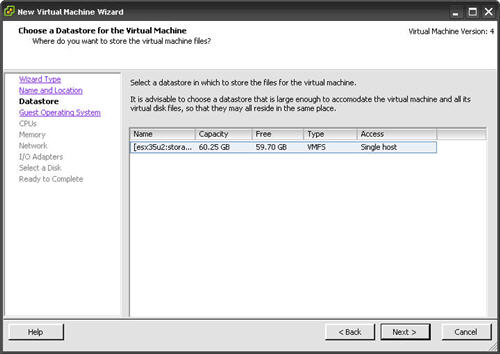

Select the default datastore by clicking the "Next" button.

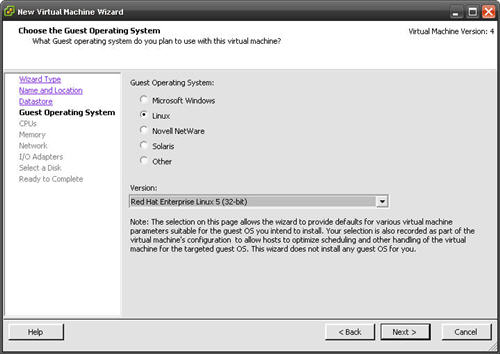

Select the "Linux" and "Red Hat Enterprise Linux 5" options, then click the "Next" button.

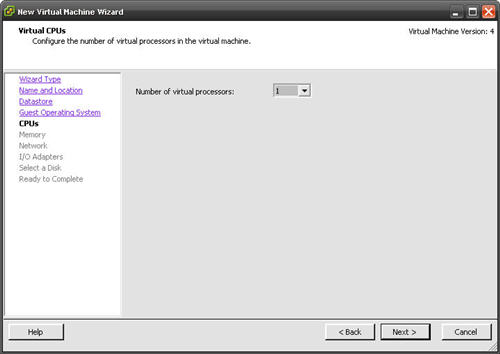

Select the required number of CPUs for the virtual machine, then click the "Next" button.

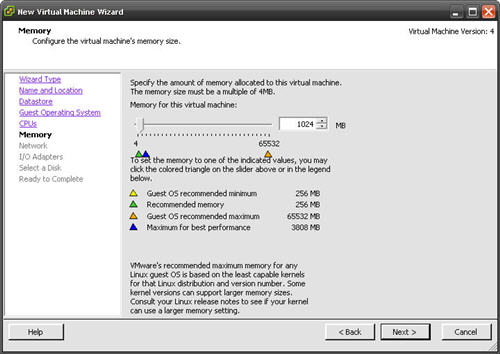

For this proof of concept we can complete the installation with 1G of memory. Enter the required amount of memory for the virtual machines, then click the "Next" button.

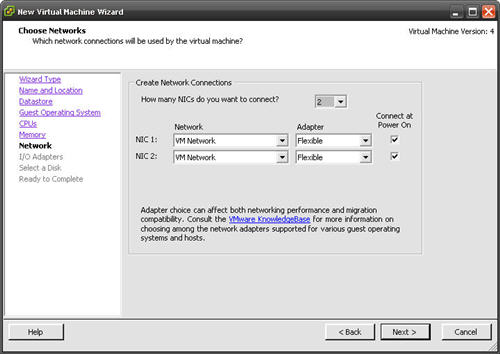

We need at least two network cards: one for the public IP and virtual IP addresses and a separate one for the private IP address. Select the number and type of network cards required, then click the "Next" button.

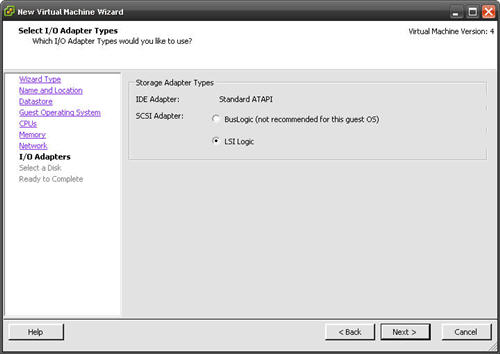

Accept the default storage adapter by clicking the "Next" button.

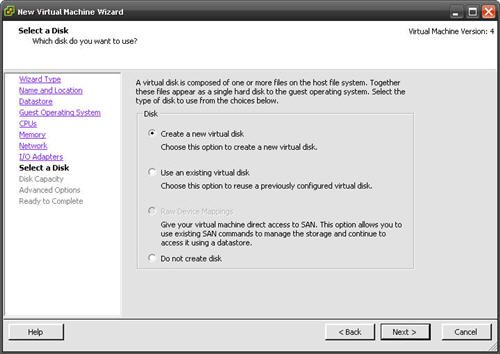

Accept the "Create a new virtual disk" option by clicking the "Next" button.

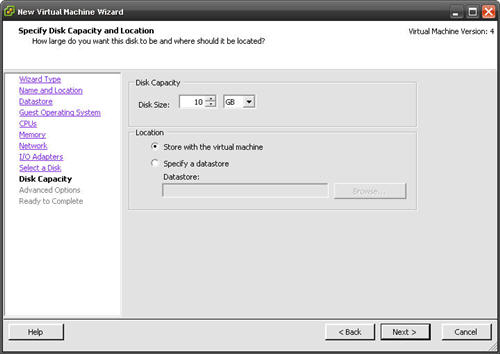

We are using NFS to store the shared Oracle homes and the database files, so we don't need much disk space on each virtual machine. Assuming you are using 1G of memory, you will be defining 2G of swap, so 10G of disk space is plenty. If you are using more memory, you will need to increase the disk space accordingly. Enter the appropriate disk capacity, then click the "Next" button.

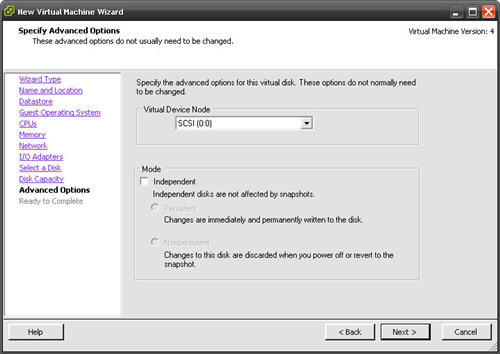

The local disks do not need to be sharable, so click the "Next" button to ignore the advanced options.

If you are happy with the summary information, click the "Finish" button.

The virtual machine is now visible in the left-hand pane.

Repeat the process to define the second RAC node (RAC2).

To start the virtual machine, click the play button on the toolbar.

The virtual machine will start and attempt to boot from any installable media, or the network.

Guest Operating System Installation

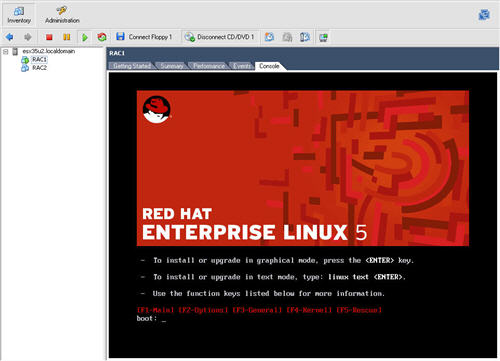

Place the RHEL 5 DVD into the client PC's DVD drive and start the virtual machine by clicking the play button on the toolbar. The right pane of the VMware ESX Server client should display a boot loader, then the RHEL 5 installation screen.

Continue through the RHEL5 installation as you would for a normal server. A general pictorial guide to the installation can be found here. More specifically, it should be a server installation with a minimum of 2G swap, firewall and SELinux disabled and the following package groups installed:

- GNOME Desktop Environment

- Editors

- Graphical Internet

- Text-based Internet

- Development Libraries

- Development Tools

- Server Configuration Tools

- Administration Tools

- Base

- System Tools

- X Window System

To be consistent with the rest of the article, the following information should be set during the installation.

RAC1.

- hostname: rac1.localdomain

- IP Address eth0: 10.1.10.201 (public address)

- Default Gateway eth0: 10.1.10.1 (public address)

- IP Address eth1: 10.1.9.201 (private address)

- Default Gateway eth1: none

RAC2.

- hostname: rac2.localdomain

- IP Address eth0: 10.1.10.202 (public address)

- Default Gateway eth0: 10.1.10.1 (public address)

- IP Address eth1: 10.1.9.202 (private address)

- Default Gateway eth1: none

You are free to change the IP addresses to suit your network, but remember to stay consistent with those adjustments throughout the rest of the article.

Once the basic installation is complete, you must install some additional packages whilst logged in as the root user. If you have an internet connection you can download and install them using the following command.

yum install binutils elfutils-libelf glibc glibc-common libaio \
libgcc libstdc++ make compat-libstdc++-33 elfutils-libelf-devel \
glibc-headers glibc-devel libgomp gcc gcc-c++ libaio-devel \
libstdc++-devel unixODBC unixODBC-devel sysstat

Alternatively, you can install them from the RHEL5 DVD.

# From Enterprise Linux 5.2 DVD

cd /media/dvd/Server

rpm -Uvh binutils-2.*

rpm -Uvh elfutils-libelf-0.*

rpm -Uvh glibc-2.*

rpm -Uvh glibc-common-2.*

rpm -Uvh libaio-0.*

rpm -Uvh libgcc-4.*

rpm -Uvh libstdc++-4.*

rpm -Uvh make-3.*

rpm -Uvh compat-libstdc++-33*

rpm -Uvh elfutils-libelf-devel-*

rpm -Uvh glibc-headers*

rpm -Uvh glibc-devel-2.*

rpm -Uvh libgomp*

rpm -Uvh gcc-4.*

rpm -Uvh gcc-c++-4.*

rpm -Uvh libaio-devel-0.*

rpm -Uvh libstdc++-devel-4.*

rpm -Uvh unixODBC-2.*

rpm -Uvh unixODBC-devel-2.*

rpm -Uvh sysstat-7.*

cd /

eject
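Whichever installation method you use, it can be worth confirming that nothing from the package list above was missed. The following is a minimal sketch using "rpm -q". The function name "missing_packages" and the overridable "RPM_QUERY" variable are assumptions made for illustration and are not part of the original procedure.

```shell
#!/bin/sh
# Package names taken from the yum command in this article.
REQUIRED_PACKAGES="binutils elfutils-libelf glibc glibc-common libaio
libgcc libstdc++ make compat-libstdc++-33 elfutils-libelf-devel
glibc-headers glibc-devel libgomp gcc gcc-c++ libaio-devel
libstdc++-devel unixODBC unixODBC-devel sysstat"

# Command used to query a single package (hypothetical override hook,
# handy for trying the function on a machine without rpm).
RPM_QUERY=${RPM_QUERY:-"rpm -q"}

# Print the name of every required package that is not installed.
missing_packages() {
    for pkg in $REQUIRED_PACKAGES; do
        $RPM_QUERY "$pkg" >/dev/null 2>&1 || echo "$pkg"
    done
}
```

Running "missing_packages" as root on each node should print nothing once all the packages are in place.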

Remember to perform the VMware Tools installation as described in the VMware ESX Server Installation article.

Oracle Installation Prerequisites

Perform the following steps whilst logged into the RAC1 virtual machine as the root user.

The "/etc/hosts" file must contain the following information.

127.0.0.1 localhost.localdomain localhost

# Public

10.1.10.201 rac1.localdomain rac1

10.1.10.202 rac2.localdomain rac2

#Private

10.1.9.201 rac1-priv.localdomain rac1-priv

10.1.9.202 rac2-priv.localdomain rac2-priv

#Virtual

10.1.10.203 rac1-vip.localdomain rac1-vip

10.1.10.204 rac2-vip.localdomain rac2-vip

#NAS

10.1.10.61 nas1.localdomain nas1
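A missing or mistyped entry in this file is a common cause of clusterware installation failures, so a quick check on each node can save time later. The sketch below looks for the short host names used in this article. The function name "missing_hosts" is an assumption for illustration, and the name list should be amended if you changed the host names.

```shell
#!/bin/sh
# Short host names this article expects to find in /etc/hosts.
REQUIRED_HOSTS="rac1 rac2 rac1-priv rac2-priv rac1-vip rac2-vip nas1"

# Print each required name that has no entry in the given hosts file.
missing_hosts() {
    hosts_file=${1:-/etc/hosts}
    for name in $REQUIRED_HOSTS; do
        # Strip comments, then look for the name as a whole field
        # following the IP address.
        awk -v n="$name" '
            { sub(/#.*/, "") }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$hosts_file" || echo "$name"
    done
}
```

Calling "missing_hosts" with no argument checks "/etc/hosts". No output means every name is present.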

Add the following lines to the "/etc/sysctl.conf" file.

kernel.shmmni = 4096

# semaphores: semmsl, semmns, semopm, semmni

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 1024 65000

net.core.rmem_default=4194304

net.core.rmem_max=4194304

net.core.wmem_default=262144

net.core.wmem_max=262144

# Additional and amended parameters suggested by Kevin Closson

#net.core.rmem_default = 524288

#net.core.wmem_default = 524288

#net.core.rmem_max = 16777216

#net.core.wmem_max = 16777216

net.ipv4.ipfrag_high_thresh=524288

net.ipv4.ipfrag_low_thresh=393216

net.ipv4.tcp_rmem=4096 524288 16777216

net.ipv4.tcp_wmem=4096 524288 16777216

net.ipv4.tcp_timestamps=0

net.ipv4.tcp_sack=0

net.ipv4.tcp_window_scaling=1

net.core.optmem_max=524287

net.core.netdev_max_backlog=2500

sunrpc.tcp_slot_table_entries=128

sunrpc.udp_slot_table_entries=128

net.ipv4.tcp_mem=16384 16384 16384

Run the following command to change the current kernel parameters.

/sbin/sysctl -p
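If you want to confirm that a setting from the file is really in effect, you can compare the file against the running kernel with "sysctl -n". The following is a rough sketch. The function names "conf_value" and "check_sysctl_key" and the overridable "SYSCTL" variable are assumptions for illustration.

```shell
#!/bin/sh
# Path to the sysctl binary (hypothetical override hook).
SYSCTL=${SYSCTL:-/sbin/sysctl}

# Print the value assigned to key $2 in the sysctl.conf-style file $1,
# with surrounding blanks removed and runs of blanks collapsed.
conf_value() {
    awk -v k="$2" '
        /^[ \t]*#/ { next }                 # skip comment lines
        index($0, "=") {
            key = substr($0, 1, index($0, "=") - 1)
            val = substr($0, index($0, "=") + 1)
            gsub(/[ \t]/, "", key)
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            gsub(/[ \t]+/, " ", val)
            if (key == k) result = val      # a later assignment overrides an earlier one
        }
        END { if (result != "") print result }
    ' "$1"
}

# Compare one key in the file $1 with the value the kernel reports.
check_sysctl_key() {
    want=$(conf_value "$1" "$2")
    # sysctl separates multi-value settings with tabs, so normalise them.
    have=$($SYSCTL -n "$2" 2>/dev/null | tr -s ' \t' ' ' | sed 's/^ //; s/ $//')
    if [ "$want" = "$have" ]; then
        echo "OK   $2 = $have"
    else
        echo "DIFF $2: file has '$want', kernel has '$have'"
        return 1
    fi
}
```

For example, "check_sysctl_key /etc/sysctl.conf kernel.sem" reports whether the semaphore settings were applied.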

Add the following lines to the "/etc/security/limits.conf" file.

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

Add the following line to the "/etc/pam.d/login" file, if it is not already present.

session required pam_limits.so

Disable SELinux by editing the "/etc/selinux/config" file, making sure the SELINUX flag is set as follows.

SELINUX=disabled

Alternatively, this alteration can be done using the GUI tool (System > Administration > Security Level and Firewall). Click on the SELinux tab and disable the feature.

Create the new groups and users.

groupadd oinstall

groupadd dba

groupadd oper

groupadd asmadmin

useradd -u 500 -g oinstall -G dba,oper,asmadmin oracle

passwd oracle

Configure SSH on each node in the cluster. Log in as the "oracle" user and perform the following tasks on each node.

su - oracle

mkdir ~/.ssh

chmod 700 ~/.ssh

/usr/bin/ssh-keygen -t rsa # Accept the default settings.

exit

The RSA public key is written to the "~/.ssh/id_rsa.pub" file and the private key to the "~/.ssh/id_rsa" file.

Log in as the "oracle" user on RAC1, generate an "authorized_keys" file on RAC1 and copy it to RAC2 using the following commands.

su - oracle

cd ~/.ssh

cat id_rsa.pub >> authorized_keys

scp authorized_keys rac2:/home/oracle/.ssh/

exit

Next, log in as the "oracle" user on RAC2 and perform the following commands.

su - oracle

cd ~/.ssh

cat id_rsa.pub >> authorized_keys

scp authorized_keys rac1:/home/oracle/.ssh/

exit

The "authorized_keys" file on both servers now contains the public keys generated on all RAC nodes.

To enable SSH user equivalency on the cluster member nodes issue the following commands on each node.

su - oracle

ssh rac1 date

ssh rac2 date

ssh rac1.localdomain date

ssh rac2.localdomain date

exec /usr/bin/ssh-agent $SHELL

/usr/bin/ssh-add

You should now be able to SSH and SCP between servers without entering passwords.
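To confirm this without watching for prompts, you can ask SSH to fail rather than prompt. The following sketch uses the standard OpenSSH "BatchMode" and "ConnectTimeout" options. The function name "check_ssh_equivalence" and the overridable "SSH" variable are assumptions for illustration. Run it as the oracle user after the host keys have been accepted, since batch mode will also fail on an unknown host key.

```shell
#!/bin/sh
# Path to the ssh client (hypothetical override hook).
SSH=${SSH:-/usr/bin/ssh}

# Node names used in this article; amend to suit your cluster.
NODES="rac1 rac2 rac1.localdomain rac2.localdomain"

# Try a password-less "date" on every node and report the outcome.
# Returns non-zero if any node could not be reached without a prompt.
check_ssh_equivalence() {
    failed=0
    for node in $NODES; do
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        if $SSH -o BatchMode=yes -o ConnectTimeout=5 "$node" date >/dev/null 2>&1; then
            echo "$node: ok"
        else
            echo "$node: password-less login failed"
            failed=1
        fi
    done
    return $failed
}
```

Call "check_ssh_equivalence" on each node. Every line should end in "ok" before you start the clusterware installer.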

Log in as the oracle user and add the following lines at the end of the ".bash_profile" file.

# Oracle Settings

TMP=/tmp; export TMP

TMPDIR=$TMP; export TMPDIR

ORACLE_HOSTNAME=rac1.localdomain; export ORACLE_HOSTNAME

ORACLE_BASE=/u01/app/oracle; export ORACLE_BASE

ORACLE_HOME=$ORACLE_BASE/product/11.1.0/db_1; export ORACLE_HOME

ORACLE_SID=RAC1; export ORACLE_SID

ORACLE_TERM=xterm; export ORACLE_TERM

PATH=/usr/sbin:$PATH; export PATH

PATH=$ORACLE_HOME/bin:$PATH; export PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib; export LD_LIBRARY_PATH

CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib; export CLASSPATH

if [ $USER = "oracle" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

fi

Remember to set the correct values for the ORACLE_SID and ORACLE_HOSTNAME on the second node.
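If you would rather keep an identical profile on both nodes, the two node-specific values can be derived from the host name instead of being typed in. This is only a sketch, and it assumes the host names follow the "racN.localdomain" pattern used in this article and that the SID is the short host name in upper case. The function name "node_sid" is hypothetical.

```shell
#!/bin/sh
# Derive the instance name from a host name such as rac2.localdomain.
node_sid() {
    short=${1%%.*}                                # rac2.localdomain -> rac2
    echo "$short" | tr '[:lower:]' '[:upper:]'    # rac2 -> RAC2
}

# Assumption: the node name matches the hostname set during installation.
ORACLE_HOSTNAME=$(uname -n); export ORACLE_HOSTNAME
ORACLE_SID=$(node_sid "$ORACLE_HOSTNAME"); export ORACLE_SID
```

These two lines would take the place of the fixed ORACLE_HOSTNAME and ORACLE_SID assignments in the profile. The remaining settings are the same on every node.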

Create Shared Disks

This installation uses NFS to provide the shared storage for RAC. Amend the following instructions to suit the paths used by your NAS or NFS server.

If you are using a third Linux server as your NFS server, you will need to create some NFS shares as described below.

mkdir /shared_config

mkdir /shared_crs

mkdir /shared_home

mkdir /shared_data

Add the following lines to the "/etc/exports" file.

/shared_config *(rw,sync,no_wdelay,insecure_locks,no_root_squash)

/shared_crs *(rw,sync,no_wdelay,insecure_locks,no_root_squash)

/shared_home *(rw,sync,no_wdelay,insecure_locks,no_root_squash)

/shared_data *(rw,sync,no_wdelay,insecure_locks,no_root_squash)

Run the following commands to enable the NFS service at boot and export the NFS shares.

chkconfig nfs on

service nfs restart

If you are using a NAS or some other storage appliance that supports NFS, make four equivalent shares.

On both RAC1 and RAC2 create the directories in which the Oracle software will be installed.

mkdir -p /u01/app/crs/product/11.1.0/crs

mkdir -p /u01/app/oracle/product/11.1.0/db_1

mkdir -p /u01/oradata

mkdir -p /u01/shared_config

chown -R oracle:oinstall /u01/app /u01/app/oracle /u01/oradata /u01/shared_config

chmod -R 775 /u01/app /u01/app/oracle /u01/oradata /u01/shared_config

Add the following lines to the "/etc/fstab" file on each server. The mount options are based on Oracle recommendations from Metalink Note:359515.1.

nas1:/shared_config /u01/shared_config nfs rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,noac,vers=3,timeo=600 0 0

nas1:/shared_crs /u01/app/crs/product/11.1.0/crs nfs rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,vers=3,timeo=600,actimeo=0 0 0

nas1:/shared_home /u01/app/oracle/product/11.1.0/db_1 nfs rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,vers=3,timeo=600,actimeo=0 0 0

nas1:/shared_data /u01/oradata nfs rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,actimeo=0,vers=3,timeo=600 0 0

Mount the NFS shares by running the following commands as the root user on both servers.

mount /u01/shared_config

mount /u01/app/crs/product/11.1.0/crs

mount /u01/app/oracle/product/11.1.0/db_1

mount /u01/oradata
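Before going any further, it is worth confirming that all four shares really are mounted over NFS on both nodes, since files written to an unmounted mount point end up on the local disk. The sketch below reads "/proc/mounts". The function name "unmounted_shares" is an assumption for illustration.

```shell
#!/bin/sh
# Mount points defined in the /etc/fstab entries in this article.
MOUNT_POINTS="/u01/shared_config
/u01/app/crs/product/11.1.0/crs
/u01/app/oracle/product/11.1.0/db_1
/u01/oradata"

# Print every expected mount point that is not listed as an NFS mount.
unmounted_shares() {
    mounts_file=${1:-/proc/mounts}
    for mp in $MOUNT_POINTS; do
        # Fields in /proc/mounts: device, mount point, filesystem type, options.
        awk -v mp="$mp" '$2 == mp && $3 ~ /^nfs/ { found = 1 } END { exit !found }' \
            "$mounts_file" || echo "$mp"
    done
}
```

Running "unmounted_shares" should print nothing on either node once the mounts have succeeded.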

Create the shared CRS Configuration and Voting Disk files.

touch /u01/shared_config/ocr_configuration

touch /u01/shared_config/voting_disk

Make sure the permissions on the shared directories are correct by running the following commands as the root user on each server.

chown -R oracle:oinstall /u01/shared_config

chown -R oracle:oinstall /u01/app/crs/product/11.1.0/crs

chown -R oracle:oinstall /u01/app/oracle/product/11.1.0/db_1

chown -R oracle:oinstall /u01/oradata

Before installing the clusterware, check the prerequisites have been met using the "runcluvfy.sh" utility in the clusterware root directory.

/mountpoint/clusterware/runcluvfy.sh stage -pre crsinst -n rac1,rac2 -verbose

If you get any failures be sure to correct them before proceeding.

Install the Clusterware Software

Unzip the clusterware and database software.

unzip linux_11gR1_clusterware.zip

unzip linux_11gR1_database.zip

Log in to RAC1 as the oracle user and start the Oracle installer.

cd clusterware

./runInstaller

On the "Welcome" screen, click the "Next" button.

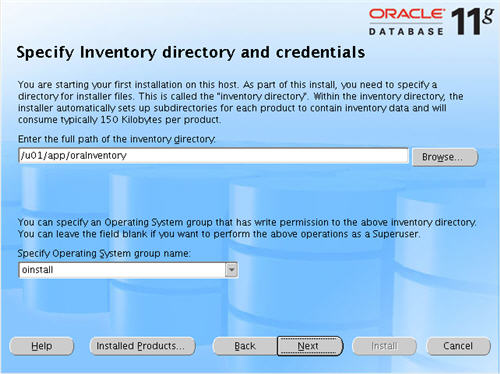

Accept the default inventory location by clicking the "Next" button.

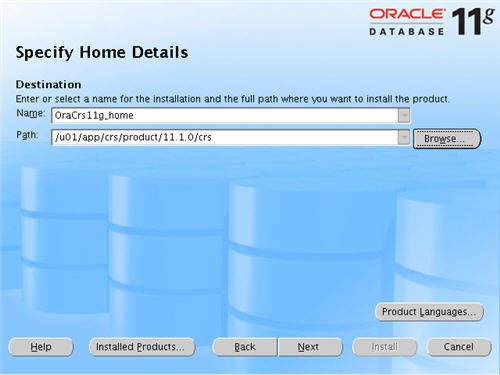

Enter "/u01/app/crs/product/11.1.0/crs" as the path for the Oracle Home and click the "Next" button.

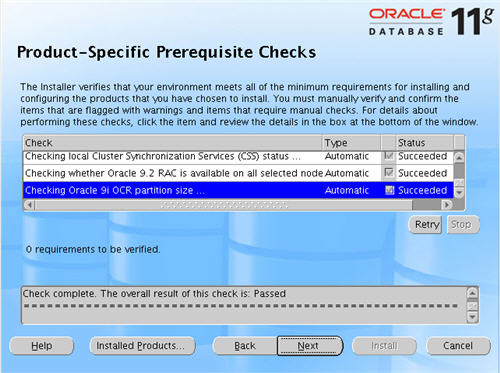

Wait while the prerequisite checks are done. If you have any failures correct them and retry the tests before clicking the "Next" button.

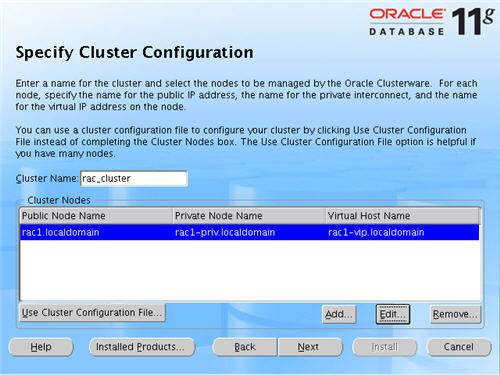

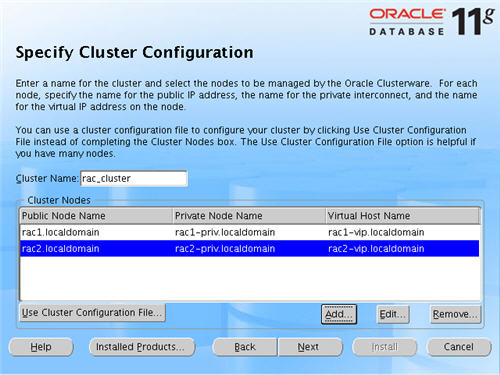

The "Specify Cluster Configuration" screen shows only the RAC1 node in the cluster. Click the "Add" button to continue.

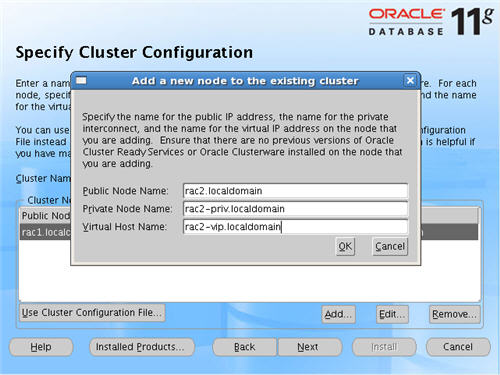

Enter the details for the RAC2 node and click the "OK" button.

Click the "Next" button to continue.

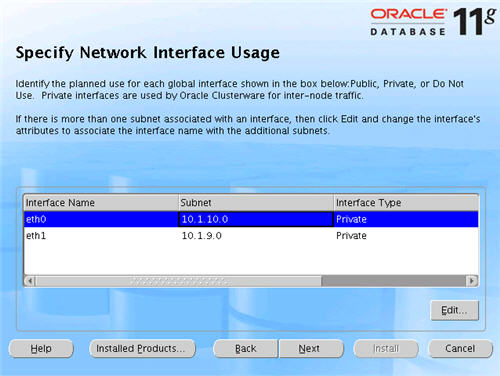

The "Specify Network Interface Usage" screen defines how each network interface will be used. Highlight the "eth0" interface and click the "Edit" button.

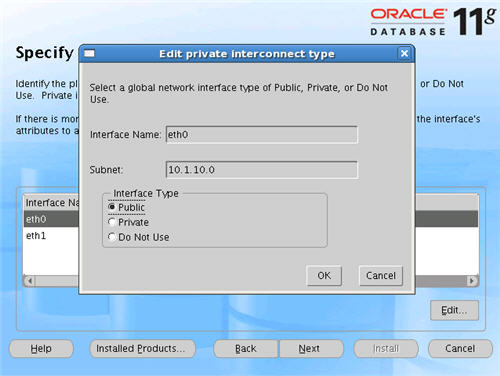

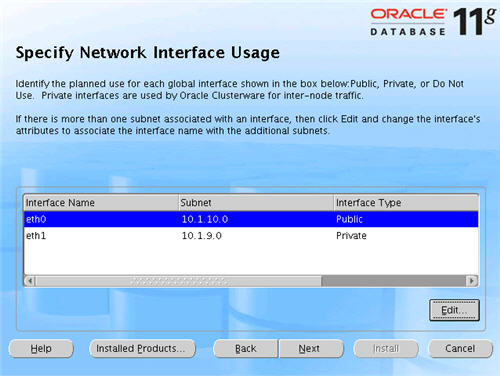

Set the "eth0" interface type to "Public" and click the "OK" button.

Leave the "eth1" interface as private and click the "Next" button.

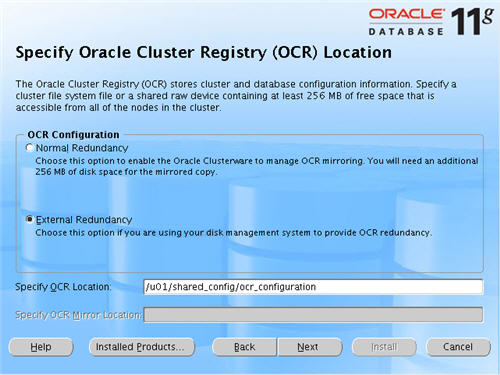

Click the "External Redundancy" option, enter "/u01/shared_config/ocr_configuration" as the OCR Location and click the "Next" button. To have greater redundancy we would need to define another shared disk for an alternate location.

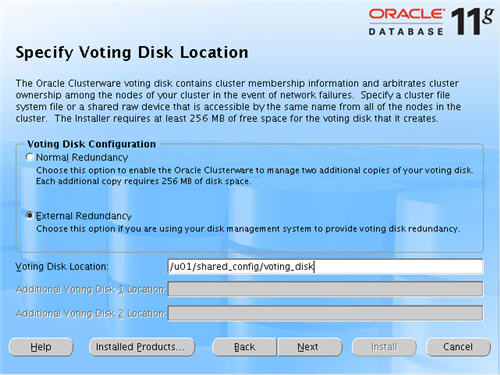

Click the "External Redundancy" option, enter "/u01/shared_config/voting_disk" as the Voting Disk Location and click the "Next" button. To have greater redundancy we would need to define another shared disk for an alternate location.

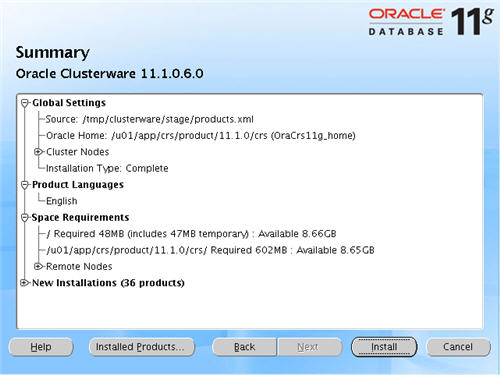

On the "Summary" screen, click the "Install" button to continue.

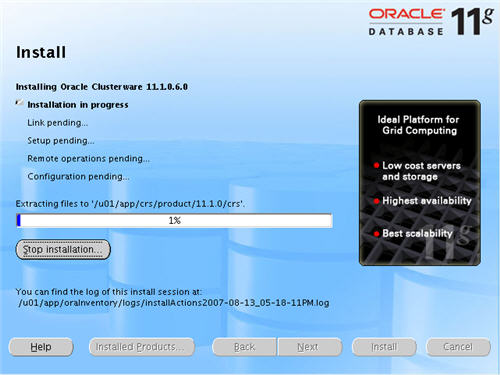

Wait while the installation takes place.

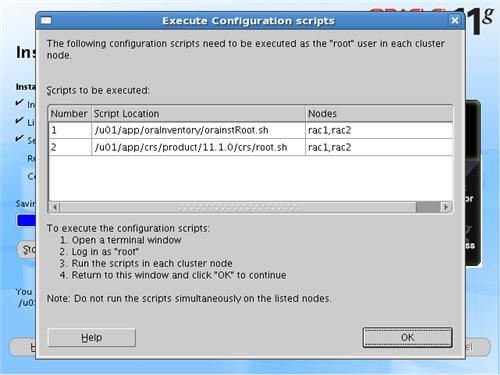

Once the install is complete, run the orainstRoot.sh and root.sh scripts on both nodes as directed on the following screen.

The output from the "orainstRoot.sh" file should look something like that listed below.

# cd /u01/app/oraInventory

# ./orainstRoot.sh

Changing permissions of /u01/app/oraInventory to 770.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete

#

The output of the "root.sh" will vary a little depending on the node it is run on. The following text is the output from the RAC1 node.

# cd /u01/app/crs/product/11.1.0/crs

# ./root.sh

WARNING: directory '/u01/app/crs/product/11.1.0' is not owned by root

WARNING: directory '/u01/app/crs/product' is not owned by root

WARNING: directory '/u01/app/crs' is not owned by root

WARNING: directory '/u01/app' is not owned by root

Checking to see if Oracle CRS stack is already configured

/etc/oracle does not exist. Creating it now.

Setting the permissions on OCR backup directory

Setting up Network socket directories

Oracle Cluster Registry configuration upgraded successfully

The directory '/u01/app/crs/product/11.1.0' is not owned by root. Changing owner to root

The directory '/u01/app/crs/product' is not owned by root. Changing owner to root

The directory '/u01/app/crs' is not owned by root. Changing owner to root

The directory '/u01/app' is not owned by root. Changing owner to root

Successfully accumulated necessary OCR keys.

Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.

node <nodenumber>: <nodename> <private interconnect name> <hostname>

node 1: rac1 rac1-priv rac1

node 2: rac2 rac2-priv rac2

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

Now formatting voting device: /u01/shared_config/voting_disk

Format of 1 voting devices complete.

Startup will be queued to init within 30 seconds.

Adding daemons to inittab

Expecting the CRS daemons to be up within 600 seconds.

Cluster Synchronization Services is active on these nodes.

rac1

Cluster Synchronization Services is inactive on these nodes.

rac2

Local node checking complete. Run root.sh on remaining nodes to start CRS daemons.

#

The output from the RAC2 node is listed below.

# cd /u01/app/crs/product/11.1.0/crs

# ./root.sh

WARNING: directory '/u01/app/crs/product/11.1.0' is not owned by root

WARNING: directory '/u01/app/crs/product' is not owned by root

WARNING: directory '/u01/app/crs' is not owned by root

WARNING: directory '/u01/app' is not owned by root

Checking to see if Oracle CRS stack is already configured

/etc/oracle does not exist. Creating it now.

Setting the permissions on OCR backup directory

Setting up Network socket directories

Oracle Cluster Registry configuration upgraded successfully

The directory '/u01/app/crs/product/11.1.0' is not owned by root. Changing owner to root

The directory '/u01/app/crs/product' is not owned by root. Changing owner to root

The directory '/u01/app/crs' is not owned by root. Changing owner to root

The directory '/u01/app' is not owned by root. Changing owner to root

clscfg: EXISTING configuration version 4 detected.

clscfg: version 4 is 11 Release 1.

Successfully accumulated necessary OCR keys.

Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.

node <nodenumber>: <nodename> <private interconnect name> <hostname>

node 1: rac1 rac1-priv rac1

node 2: rac2 rac2-priv rac2

clscfg: Arguments check out successfully.

NO KEYS WERE WRITTEN. Supply -force parameter to override.

-force is destructive and will destroy any previous cluster

configuration.

Oracle Cluster Registry for cluster has already been initialized

Startup will be queued to init within 30 seconds.

Adding daemons to inittab

Expecting the CRS daemons to be up within 600 seconds.

Cluster Synchronization Services is active on these nodes.

rac1

rac2

Cluster Synchronization Services is active on all the nodes.

Waiting for the Oracle CRSD and EVMD to start

Waiting for the Oracle CRSD and EVMD to start

Oracle CRS stack installed and running under init(1M)

Running vipca(silent) for configuring nodeapps

Creating VIP application resource on (2) nodes...

Creating GSD application resource on (2) nodes...

Creating ONS application resource on (2) nodes...

Starting VIP application resource on (2) nodes...

Starting GSD application resource on (2) nodes...

Starting ONS application resource on (2) nodes...

Done.

#

Here you can see that some of the configuration steps are omitted as they were done by the first node. In addition, the final part of the script ran the Virtual IP Configuration Assistant (VIPCA) in silent mode.

You should now return to the "Execute Configuration Scripts" screen on RAC1 and click the "OK" button.

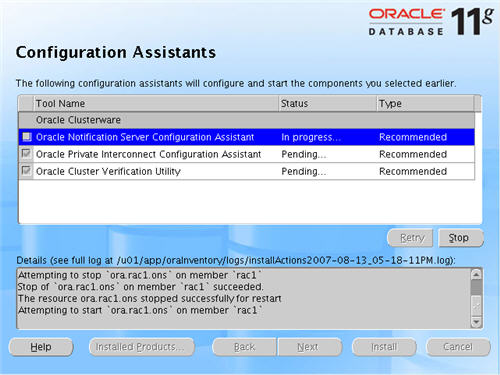

Wait for the configuration assistants to complete.

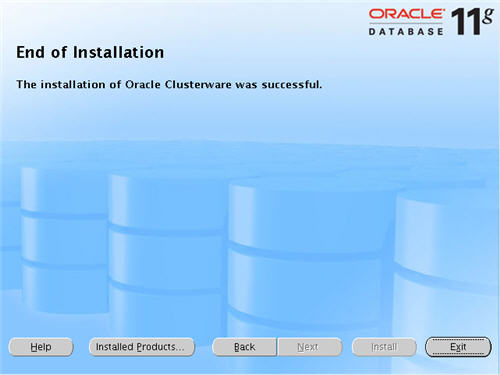

When the installation is complete, click the "Exit" button to leave the installer.

The clusterware installation is now complete.

Install the Database Software

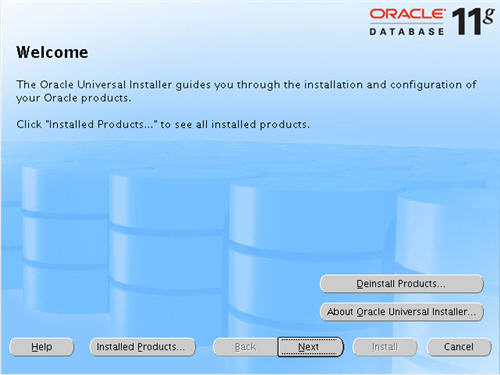

Log in to RAC1 as the oracle user and start the Oracle installer.

cd database

./runInstaller

On the "Welcome" screen, click the "Next" button.

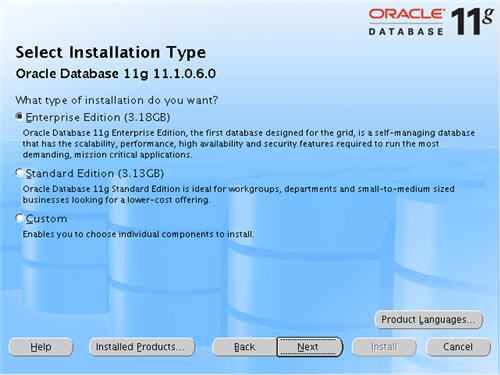

Select the "Enterprise Edition" option and click the "Next" button.

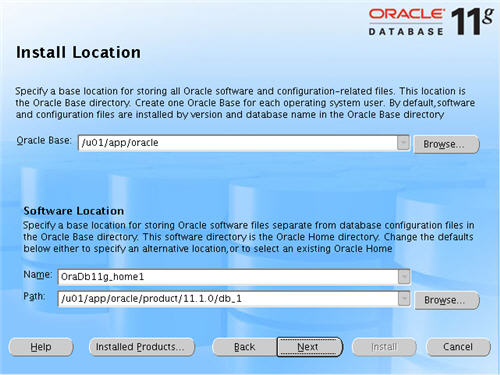

Enter the name and path for the Oracle Home and click the "Next" button.

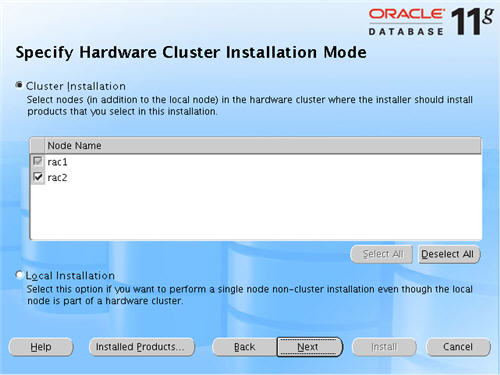

Select the "Cluster Install" option and make sure both RAC nodes are selected, then click the "Next" button.

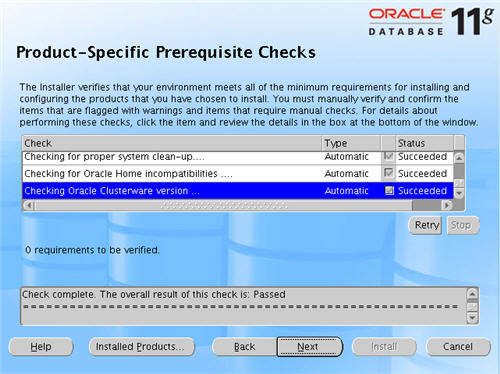

Wait while the prerequisite checks are done. If you have any failures correct them and retry the tests before clicking the "Next" button.

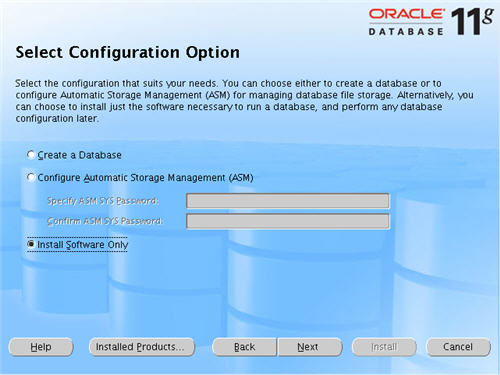

Select the "Install Software only" option, then click the "Next" button.

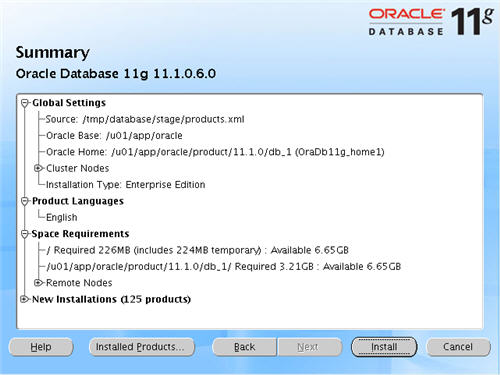

On the "Summary" screen, click the "Install" button to continue.

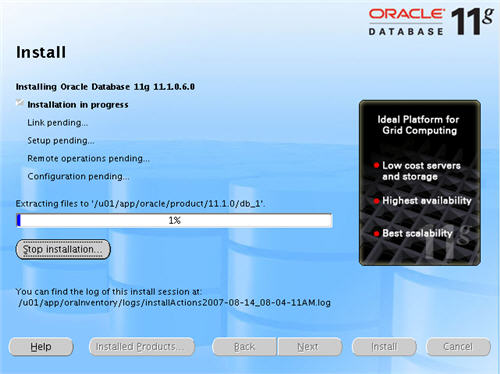

Wait while the database software installs.

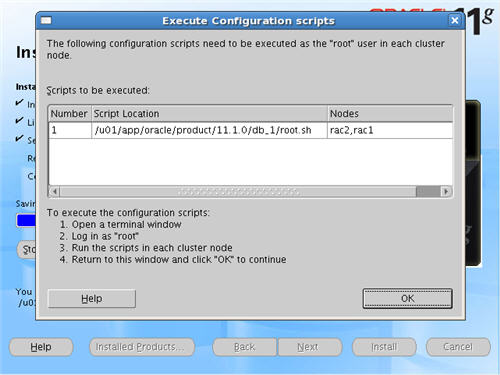

Execute the "root.sh" scripts on both nodes, as instructed on the "Execute Configuration scripts" screen, then click the "OK" button.

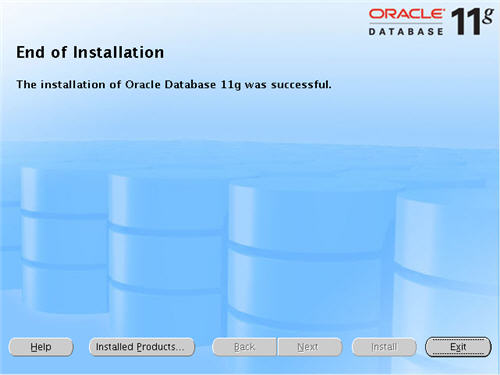

When the installation is complete, click the "Exit" button to leave the installer.

Create a Database using the DBCA

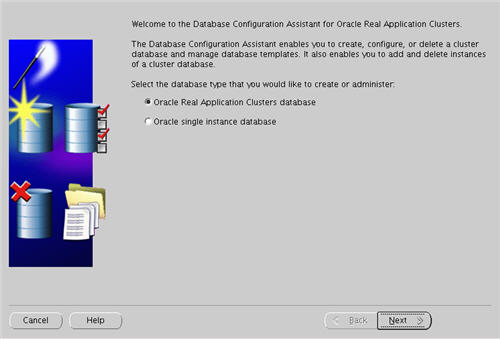

Log in to RAC1 as the oracle user and start the Database Configuration Assistant.

dbca

On the "Welcome" screen, select the "Oracle Real Application Clusters database" option and click the "Next" button.

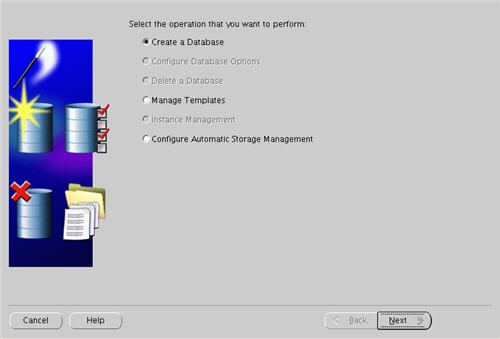

Select the "Create a Database" option and click the "Next" button.

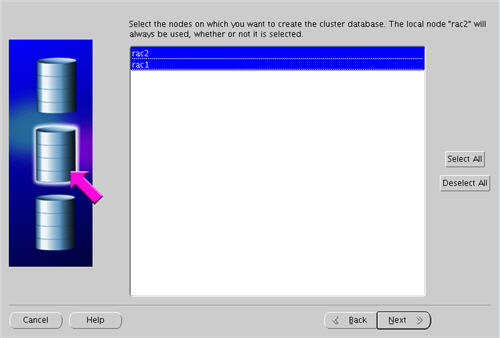

Highlight both RAC nodes and click the "Next" button.

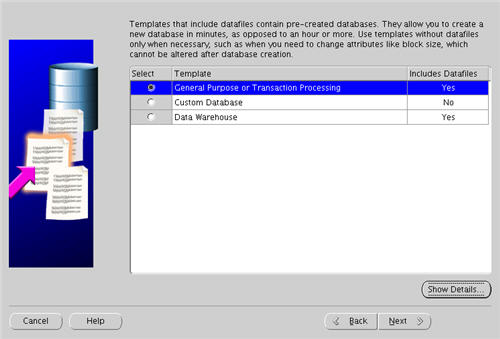

Select the "General Purpose or Transaction Processing" option and click the "Next" button.

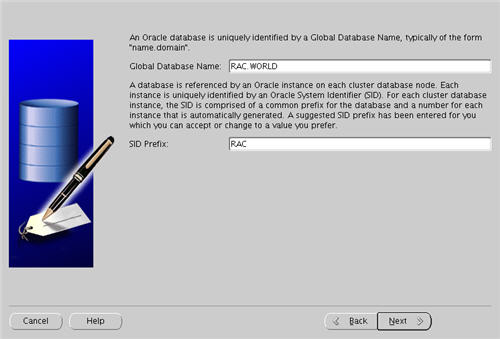

Enter the values "RAC.WORLD" and "RAC" for the Global Database Name and SID Prefix respectively, then click the "Next" button.

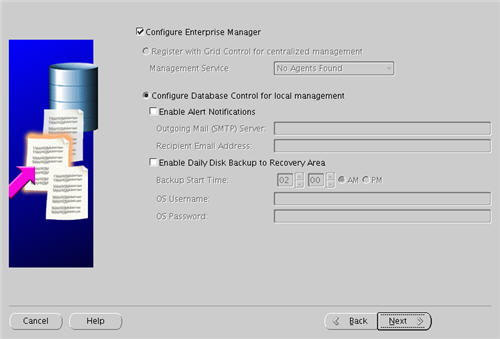

Accept the management options by clicking the "Next" button. If you are attempting the installation on a server with limited memory, you may prefer not to configure Enterprise Manager at this time.

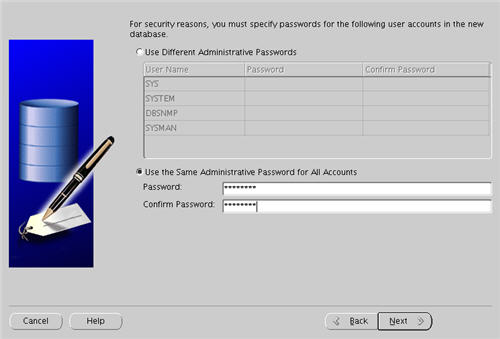

Enter database passwords then click the "Next" button.

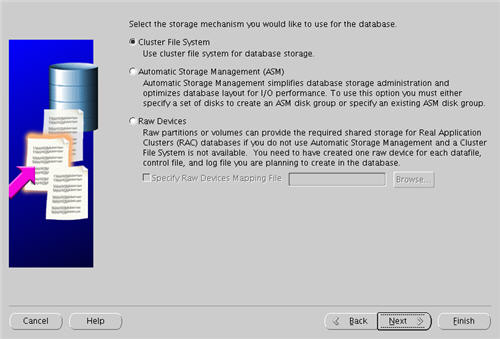

Select the "Cluster File System" option, then click the "Next" button.

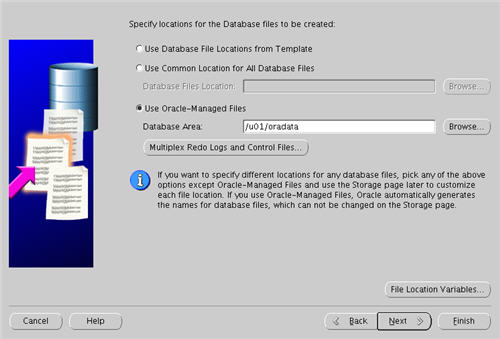

Select the "Use Oracle-Managed Files" option and enter "/u01/oradata/" as the database location, then click the "Next" button.

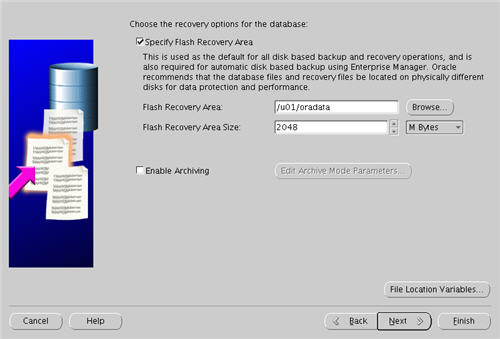

Check the "Specify Flash Recovery Area" option and enter "/u01/oradata/" as the location, then click the "Next" button. Note: I wouldn't normally expect to see the flash recovery area and datafiles in the same location, but this is only a test.

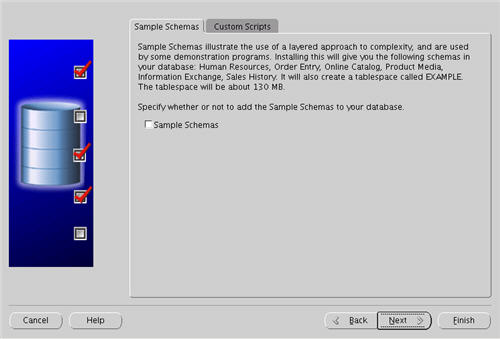

Accept the database content settings by clicking the "Next" button.

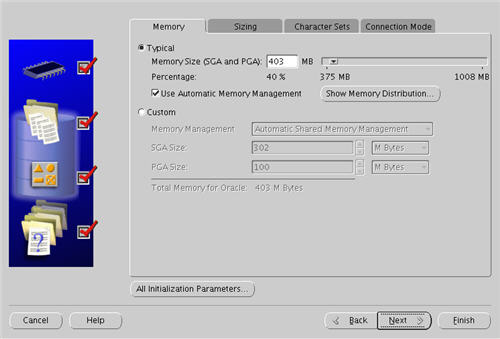

Accept the "Typical" memory management option by clicking the "Next" button.

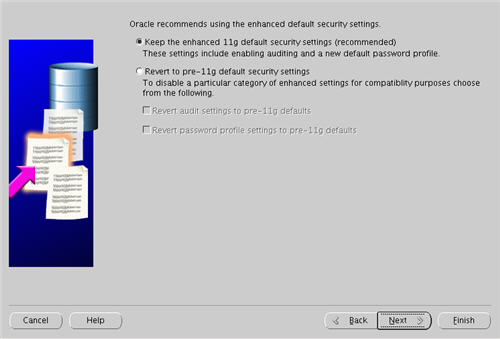

Accept the 11g enhanced security settings by clicking the "Next" button.

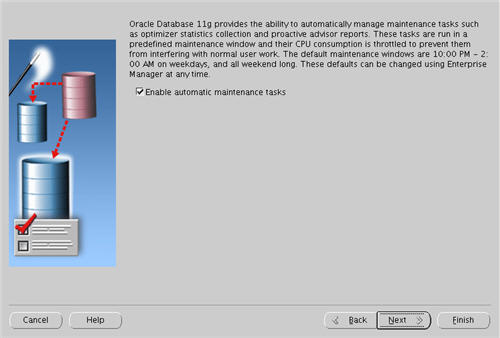

Accept the automatic maintenance tasks by clicking the "Next" button.

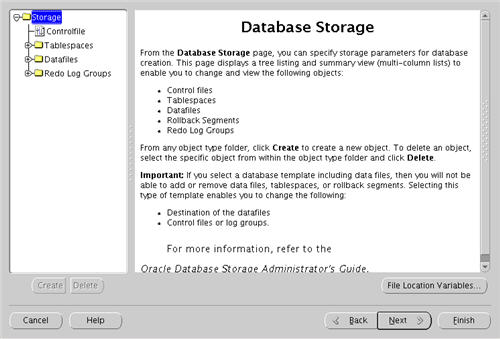

Accept the database storage settings by clicking the "Next" button.

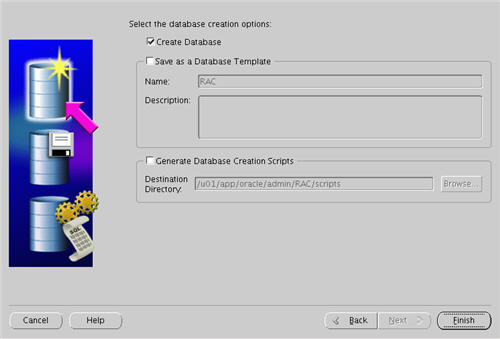

Accept the database creation options by clicking the "Finish" button.

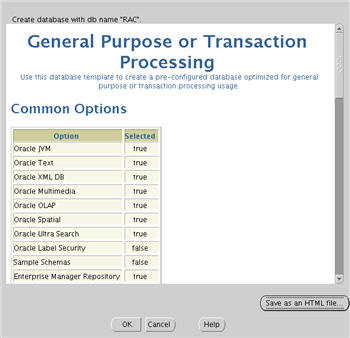

Accept the summary information by clicking the "OK" button.

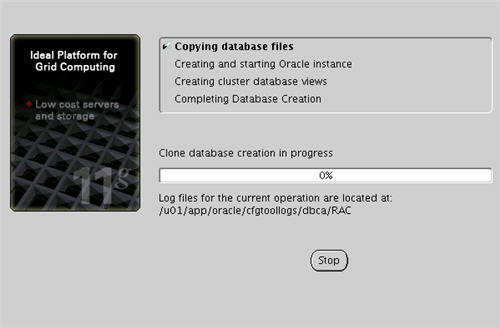

Wait while the database is created.

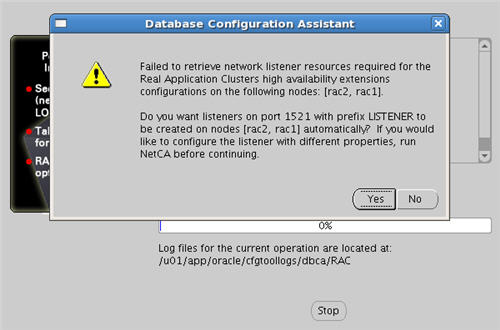

When prompted, click the "Yes" button to configure the listeners with the default settings.

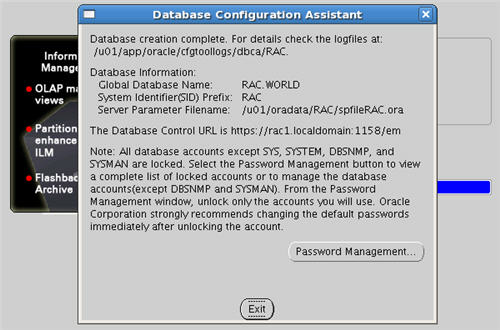

Once the database creation is complete you are presented with the following screen. Make a note of the information on the screen and click the "Exit" button.

The RAC database creation is now complete.

TNS Configuration

Once the installation is complete, the "$ORACLE_HOME/network/admin/listener.ora" file in the shared $ORACLE_HOME will contain the following entries.

# listener.ora Network Configuration File: /u01/app/oracle/product/11.1.0/db_1/network/admin/listener.ora

# Generated by Oracle configuration tools.

LISTENER_RAC2 =

(DESCRIPTION_LIST =

(DESCRIPTION =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac2-vip)(PORT = 1521)(IP = FIRST))

)

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = 10.1.10.202)(PORT = 1521)(IP = FIRST))

)

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = IPC)(KEY = EXTPROC))

)

)

)

LISTENER_RAC1 =

(DESCRIPTION_LIST =

(DESCRIPTION =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac1-vip)(PORT = 1521)(IP = FIRST))

)

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = 10.1.10.201)(PORT = 1521)(IP = FIRST))

)

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = IPC)(KEY = EXTPROC))

)

)

)

The shared "$ORACLE_HOME/network/admin/tnsnames.ora" file will contain the following entries.

# tnsnames.ora Network Configuration File: /u01/app/oracle/product/11.1.0/db_1/network/admin/tnsnames.ora

# Generated by Oracle configuration tools.

RAC =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac1-vip)(PORT = 1521))

(ADDRESS = (PROTOCOL = TCP)(HOST = rac2-vip)(PORT = 1521))

(LOAD_BALANCE = yes)

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = RAC.WORLD)

)

)

LISTENERS_RAC =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac1-vip)(PORT = 1521))

(ADDRESS = (PROTOCOL = TCP)(HOST = rac2-vip)(PORT = 1521))

)

RAC2 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac2-vip)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = RAC.WORLD)

(INSTANCE_NAME = RAC2)

)

)

RAC1 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac1-vip)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = RAC.WORLD)

(INSTANCE_NAME = RAC1)

)

)

This configuration allows direct connections to a specific instance, or load-balanced connections to the main service.

$ sqlplus / as sysdba

SQL*Plus: Release 11.1.0.6.0 - Production on Tue Aug 19 16:54:45 2008

Copyright (c) 1982, 2007, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.1.0.6.0 - Production

With the Partitioning, Real Application Clusters, OLAP, Data Mining

and Real Application Testing options

SQL> CONN sys/password@rac1 AS SYSDBA

Connected.

SQL> SELECT instance_name, host_name FROM v$instance;

INSTANCE_NAME HOST_NAME

---------------- ----------------------------------------------------------------

RAC1 rac1.lynx.co.uk

SQL> CONN sys/password@rac2 AS SYSDBA

Connected.

SQL> SELECT instance_name, host_name FROM v$instance;

INSTANCE_NAME HOST_NAME

---------------- ----------------------------------------------------------------

RAC2 rac2.lynx.co.uk

SQL> CONN sys/password@rac AS SYSDBA

Connected.

SQL> SELECT instance_name, host_name FROM v$instance;

INSTANCE_NAME HOST_NAME

---------------- ----------------------------------------------------------------

RAC1 rac1.lynx.co.uk

SQL>

Check the Status of the RAC

There are several ways to check the status of the RAC. The srvctl utility shows the current configuration and status of the RAC database.

$ srvctl config database -d RAC

rac1 RAC1 /u01/app/oracle/product/11.1.0/db_1

rac2 RAC2 /u01/app/oracle/product/11.1.0/db_1

$

$ srvctl status database -d RAC

Instance RAC1 is running on node rac1

Instance RAC2 is running on node rac2

$
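The status output is easy to check from a script, for example as part of a simple monitoring job. The sketch below lists any instance whose line does not say "is running on node", based on the output format shown above. It assumes a stopped instance is still reported on a line starting with "Instance". The function name "stopped_instances" and the overridable "SRVCTL" variable are hypothetical.

```shell
#!/bin/sh
# Name of the srvctl binary (hypothetical override hook).
SRVCTL=${SRVCTL:-srvctl}

# Print the instances of database $1 that srvctl does not report as running.
stopped_instances() {
    $SRVCTL status database -d "$1" |
        awk '/^Instance / && !/ is running on node / { print $2 }'
}
```

Running "stopped_instances RAC" as the oracle user should print nothing when both instances are up.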

The V$ACTIVE_INSTANCES view can also display the current status of the instances.

$ sqlplus / as sysdba

SQL*Plus: Release 11.1.0.6.0 - Production on Tue Aug 19 16:55:31 2008

Copyright (c) 1982, 2007, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.1.0.6.0 - Production

With the Partitioning, Real Application Clusters, OLAP, Data Mining

and Real Application Testing options

SQL> SELECT * FROM v$active_instances;

INST_NUMBER INST_NAME

----------- ------------------------------------------------------------

1 rac1.lynx.co.uk:RAC1

2 rac2.lynx.co.uk:RAC2

SQL>

Finally, the GV$ views allow you to display global information for the whole RAC.

SQL> SELECT inst_id, username, sid, serial# FROM gv$session WHERE username IS NOT NULL;

INST_ID USERNAME SID SERIAL#

---------- ------------------------------ ---------- ----------

2 SYS 116 841

2 SYSMAN 118 78

2 SYS 119 1992

2 SYSMAN 121 1

2 SYSMAN 122 29

2 SYS 123 2

2 SYSMAN 124 50

2 DBSNMP 129 1

2 DBSNMP 130 6

2 DBSNMP 134 1

2 SYSMAN 145 53

INST_ID USERNAME SID SERIAL#

---------- ------------------------------ ---------- ----------

2 SYS 170 14

1 SYSMAN 117 144

1 SYSMAN 118 186

1 SYSMAN 119 31

1 SYS 121 3

1 SYSMAN 122 162

1 SYSMAN 123 99

1 DBSNMP 124 3

1 SYS 125 2

1 SYS 126 19

1 SYS 127 291

INST_ID USERNAME SID SERIAL#

---------- ------------------------------ ---------- ----------

1 DBSNMP 131 61

1 SYS 170 17

24 rows selected.

SQL>

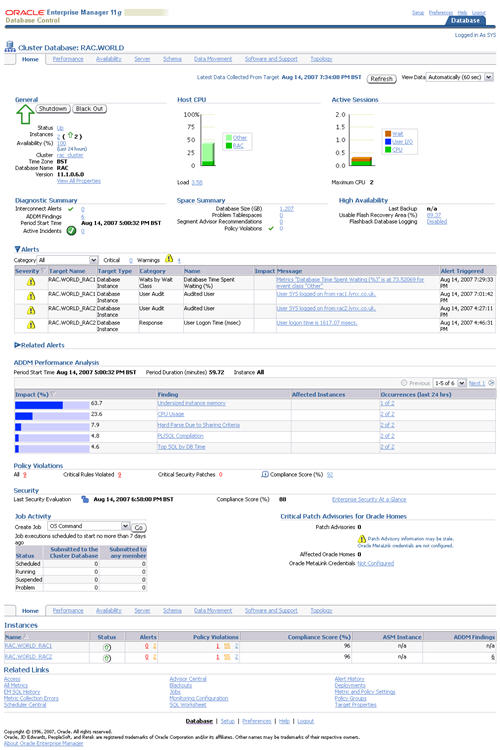

If you have configured Enterprise Manager, it can be used to view the configuration and current status of the database using a URL like "https://rac1.localdomain:1158/em".

Direct NFS Client

For improved NFS performance, Oracle recommend using the Direct NFS Client shipped with Oracle 11g. The direct NFS client looks for NFS details in the following locations.

- $ORACLE_HOME/dbs/oranfstab

- /etc/oranfstab

- /etc/mtab

Since we already have our NFS mount point details in the "/etc/fstab", and therefore the "/etc/mtab" file also, there is no need to configure any extra connection details.

For the client to work we need to switch the libodm11.so library for the libnfsodm11.so library, as shown below.

srvctl stop database -d RAC

cd $ORACLE_HOME/lib

mv libodm11.so libodm11.so_stub

ln -s libnfsodm11.so libodm11.so

srvctl start database -d RAC
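Because the switch is just a symbolic link, you can check which library is currently in place before restarting the database. The following is a minimal sketch. The function name "dnfs_enabled" is an assumption for illustration.

```shell
#!/bin/sh
# Succeed if libodm11.so in the given lib directory is a symbolic link
# to the Direct NFS library, and fail otherwise.
dnfs_enabled() {
    lib_dir=${1:-$ORACLE_HOME/lib}
    [ "$(readlink "$lib_dir/libodm11.so")" = "libnfsodm11.so" ]
}
```

For example, "dnfs_enabled && echo enabled" run as the oracle user prints "enabled" only after the link has been created. Removing the link and renaming "libodm11.so_stub" back to "libodm11.so" would reverse the change.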

With the configuration complete, you can see the direct NFS client usage via the following views.

- v$dnfs_servers

- v$dnfs_files

- v$dnfs_channels

- v$dnfs_stats

For example.

SQL> SELECT svrname, dirname FROM v$dnfs_servers;

SVRNAME DIRNAME

------------- -----------------

nas1 /shared_data

SQL>

The Direct NFS Client supports direct I/O and asynchronous I/O by default.

For more information see:

- Oracle 11g Release 1 RAC On Linux Using VMware Server

- Clusterware Installation Guide for Linux

- Real Application Clusters Installation Guide for Linux and UNIX

- Oracle Database Installation Guide 11g Release 1 (11.1) for Linux

- Direct and Asynchronous I/O

Hope this helps. Regards Tim...