8i | 9i | 10g | 11g | 12c | 13c | 18c | 19c | 21c | 23ai | Misc | PL/SQL | SQL | RAC | WebLogic | Linux

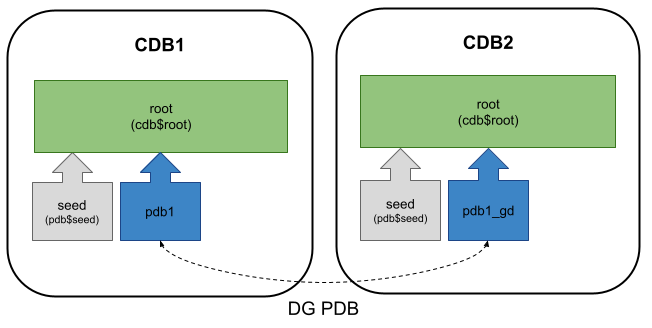

DG PDB : Oracle Data Guard per Pluggable Database in Oracle Database 21c (21.7 Onward)

DG PDB is a new feature introduced in Oracle database 21c (21.7) which allows PDB-level Data Guard protection. This article describes a basic setup of DG PDB.

Thanks to Fernando Simon, who spotted some of the issues, which saved me a lot of time and headaches. You should read his post on this feature here.

Related articles.

- Data Guard : The PREPARE DATABASE FOR DATA GUARD Command in Oracle Database 21c

- Read-Only Oracle Homes in Oracle Database 18c

- Data Guard Quick Links : 11gR2, 12cR1, 12cR2, 18c, 19c, 21c, All Articles

Assumptions

This article assumes the following infrastructure is in place before starting.

- We have two servers (physical or VMs) with Oracle 21.7 installed on them. The Oracle installations must be patched to 21.7, or this functionality will not work.

- Source CDB: cdb1 running on ol8-21-dgpdb1.

- Standby CDB: cdb2 running on ol8-21-dgpdb2.

- Source PDB: pdb1 running inside cdb1.

- Standby PDB: We will be creating pdb1_dg in cdb2 during this process, so we start with no PDB in the standby CDB. We could use the same PDB name on both instances, but for an article it's easier to describe it if the PDB names are different between the two instances

- Both CDBs could contain additional PDBs that are either not protected by DG PDB, or have separate DG PDB configurations. In this example we are keeping the setup simple by excluding additional PDBs.

In addition to the normal database prerequisites, we have installed the sshpass package.

dnf install -y sshpass

These environment variables will be used for many of the setup scripts. Looking at their values may help understand what we are doing here. Adjust as required, but be consistent.

# Hosts

export DOMAIN_NAME=localdomain

export NODE1_HOSTNAME=ol8-21-dgpdb1

export NODE2_HOSTNAME=ol8-21-dgpdb2

export NODE1_FQ_HOSTNAME=${NODE1_HOSTNAME}.${DOMAIN_NAME}

export NODE2_FQ_HOSTNAME=${NODE2_HOSTNAME}.${DOMAIN_NAME}

# Paths

export ORACLE_BASE=/u01/app/oracle

export ORA_INVENTORY=/u01/app/oraInventory

export ORACLE_HOME_EXT=product/21.0.0/dbhome_1

export READONLY_HOME=${ORACLE_BASE}/homes/OraDB21Home1

export DATA_DIR=/u01/oradata

# Databases

export NODE1_ORACLE_SID=cdb1

export NODE1_DB_NAME=${NODE1_ORACLE_SID}

export NODE1_PDB_NAME=pdb1

export NODE1_DB_UNIQUE_NAME=${NODE1_ORACLE_SID}

export NODE2_ORACLE_SID=cdb2

export NODE2_DB_NAME=${NODE2_ORACLE_SID}

export NODE2_PDB_NAME=pdb1_dg

export NODE2_DB_UNIQUE_NAME=${NODE2_ORACLE_SID}

# Passwords

export ORACLE_PASSWORD=oracle

export SYS_PASSWORD="SysPassword1"

export PDB_PASSWORD="PdbPassword1"

export DGPDB_INT_PASSWORD="DgpdbIntPassword1"

Some of the prerequisite scripts below reference these environment variables, which are expanded out in the subsequent command and configuration files. If you are running the commands manually, rather than as scripts, remember to manually expand the commands with the relevant values before running them.

There is a Vagrant build here, which includes the build of the servers, the database software installations, database creations and the perquisites, so you can jump straight to the DG PDB configuration section.

Prerequisites

Enable Oracle Managed Files (OMF) on both instances.

sqlplus / as sysdba <<EOF

alter system set db_create_file_dest='${DATA_DIR}';

alter system set db_create_online_log_dest_1='${DATA_DIR}';

-- Do addition log destination for multiplexing.

--alter system set db_create_online_log_dest_2='${DATA_DIR}';

exit;

EOF

The "tnsnames.ora" files for both instances contain the following entries.

CDB1.WORLD =

(DESCRIPTION =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = ol8-21-dgpdb1.localdomain)(PORT = 1521))

)

(CONNECT_DATA =

(SERVER = DEDICATED)

(SID = cdb1)

)

)

CDB2.WORLD =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = ol8-21-dgpdb2.localdomain)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = cdb2.world)

)

)

Prepare both instances for Data Guard. This sets all necessary initialisation parameters, sets varous database states and enables the broker. You can read what it does here.

# Node 1

dgmgrl / <<EOF

prepare database for data guard

with db_unique_name is ${NODE1_DB_UNIQUE_NAME}

db_recovery_file_dest is "${ORACLE_BASE}/fast_recovery_area"

db_recovery_file_dest_size is 20g;

exit;

EOF

# Node 2

dgmgrl / <<EOF

prepare database for data guard

with db_unique_name is ${NODE2_DB_UNIQUE_NAME}

db_recovery_file_dest is "${ORACLE_BASE}/fast_recovery_area"

db_recovery_file_dest_size is 20g;

exit;

EOF

Some of the Data Guard configuration will fail unless we use a wallet to hold the credentials, allowing us to connect without having to specify credentials manually. Add a wallet location into the "sqlnet.ora" file on both nodes.

cat >> ${READONLY_HOME}/network/admin/sqlnet.ora <<EOF

NAMES.DIRECTORY_PATH= (TNSNAMES, EZCONNECT)

SQLNET.WALLET_OVERRIDE = true

WALLET_LOCATION =

(

SOURCE =

(METHOD = FILE)

(METHOD_DATA =

(DIRECTORY = ${READONLY_HOME}/wallet)

)

)

EOF

Restart the listener on both nodes.

lsnrctl stop lsnrctl start

Create a wallet on the source database.

mkdir -p ${READONLY_HOME}/wallet

mkstore -wrl ${READONLY_HOME}/wallet -createALO

mkstore -wrl ${READONLY_HOME}/wallet -createCredential ${NODE1_ORACLE_SID} sys ${SYS_PASSWORD}

mkstore -wrl ${READONLY_HOME}/wallet -createCredential ${NODE2_ORACLE_SID} sys ${SYS_PASSWORD}

mkstore -wrl ${READONLY_HOME}/wallet -listCredential

Copy the wallet to the second node. These commands are run from the second node.

mkdir -p ${READONLY_HOME}/wallet

ssh-keyscan -H ${NODE1_FQ_HOSTNAME} >> ~/.ssh/known_hosts

ssh-keyscan -H ${NODE2_FQ_HOSTNAME} >> ~/.ssh/known_hosts

echo ${ORACLE_PASSWORD} > /tmp/temp1.txt

sshpass -f /tmp/temp1.txt scp ${NODE1_FQ_HOSTNAME}:${READONLY_HOME}/wallet/* ${READONLY_HOME}/wallet

rm /tmp/temp1.txt

Check you can connect to the source and target databases from each node without needing a password. If this doesn't work there is no point trying to move forward.

sqlplus /@cdb1 as sysdba sqlplus /@cdb2 as sysdba

DG PDB Configuration

Create a configuration for each instance.

# Node 1 dgmgrl /@cdb1 <<EOF create configuration my_dg_config1 as primary database is cdb1 connect identifier is cdb1; exit; EOF # Node 2 dgmgrl /@cdb2 <<EOF create configuration my_dg_config2 as primary database is cdb2 connect identifier is cdb2; exit; EOF

Add the the configuration for the standby to the source database configuration.

dgmgrl /@cdb1 <<EOF add configuration my_dg_config2 connect identifier is cdb2; exit; EOF

Enable the configurations on both nodes.

# Node 1 dgmgrl /@cdb1 <<EOF enable configuration all; exit; EOF # Node 2 dgmgrl /@cdb2 <<EOF enable configuration all; exit; EOF

Connect to the standby CDB and add the pluggable database we want to protect to the configuration on the second node. It will prompt

for the DGPDB_INT database user password. The first time we call the command it will fail on the standby node, but it works when we issue the command a second time.

dgmgrl /@cdb2 DGMGRL> add pluggable database pdb1_dg at cdb2 source is pdb1 at cdb1 pdbfilenameconvert is "'/CDB1/pdb1/','/CDB2/pdb1_dg/'"; Connected to "cdb1" Enter password for DGPDB@cdb1: Connected to "cdb2" Enter password for DGPDB@cdb2: ORA-17628: Oracle error 11402 returned by remote Oracle server ORA-11402: Oracle Data Guard site entry not found DGMGRL> DGMGRL> add pluggable database pdb1_dg at cdb2 source is pdb1 at cdb1 pdbfilenameconvert is "'/CDB1/pdb1/','/CDB2/pdb1_dg/'"; Connected to "cdb1" Connected to "cdb2" Pluggable Database "PDB1_DG" added DGMGRL>

Show the configuration of the PDBs on the two nodes.

DGMGRL> show pluggable database pdb1 at cdb1; Connected to "cdb1" Pluggable database 'pdb1' at database 'cdb1' Data Guard Role: Primary Con_ID: 3 Active Target: con_id 4 at cdb2 Pluggable Database Status: SUCCESS DGMGRL> show pluggable database pdb1_dg at cdb2; Pluggable database 'pdb1_dg' at database 'cdb2' Data Guard Role: Physical Standby Con_ID: 4 Source: con_id 3 at cdb1 Transport Lag: (unknown) Intended State: APPLY-ON Apply State: Not Running Pluggable Database Status: DGM-5103: one or more data files were not found ORA-16766: Redo Apply is stopped DGMGRL>

Notice the standby PDB currently have no datafiles. We have to manually copy those between the servers. There are various ways to do this, including RMAN datafile copies, the DBMS_FILE_TRANSFER package and SCP. In this example we will use SCP.

The following commands copy the pdb1 datafiles to the correct location on the second node for the pdb1_dg database.

mkdir -p /u01/oradata/CDB2/pdb1_dg/

echo ${ORACLE_PASSWORD} > /tmp/temp1.txt

sshpass -f /tmp/temp1.txt scp ${NODE1_FQ_HOSTNAME}:/u01/oradata/CDB1/pdb1/* /u01/oradata/CDB2/pdb1_dg/

rm /tmp/temp1.txt

ls /u01/oradata/CDB2/pdb1_dg/

We enable the configuration again on the second node.

dgmgrl /@cdb2 <<EOF enable configuration all; exit; EOF

Now we can see pdb1_dg has a normal standby status.

dgmgrl /@cdb2 DGMGRL> show pluggable database pdb1_dg at cdb2; Pluggable database 'pdb1_dg' at database 'cdb2' Data Guard Role: Physical Standby Con_ID: 4 Source: con_id 3 at cdb1 Transport Lag: (unknown) Intended State: APPLY-ON Apply State: Running Apply Instance: cdb2 Average Apply Rate: (unknown) Real Time Query: OFF Pluggable Database Status: SUCCESS DGMGRL>

Switchover PDB

A switchover is a controlled role change between the primary and standby PDBs. There is nothing wrong with the primary PDB, but we want to convert the standby PDB into the primary PDB.

Connect to the current primary database and switchover the pluggable database.

dgmgrl /@cdb1 DGMGRL> switchover to pluggable database pdb1_dg at cdb2; Verifying conditions for Switchover... Connected to "cdb2" Connected to "cdb1" Source pluggable database is 'PDB1' at database 'cdb1' Performing switchover NOW, please wait... Closing pluggable database 'PDB1'... Switching 'PDB1' to standby role... Connected to "cdb2" Waiting for 'PDB1_DG' to recover all redo data... Stopping recovery at 'PDB1_DG'... Converting 'PDB1_DG' to primary role... Opening new primary 'PDB1_DG'... Connected to "cdb1" Waiting for redo data from new primary 'PDB1_DG'... Starting recovery at new standby 'PDB1'... Switchover succeeded, new primary is "PDB1_DG" DGMGRL>

Checking the status of the two configurations shows us the roles have changed.

DGMGRL> show pluggable database pdb1 at cdb1; Pluggable database 'pdb1' at database 'cdb1' Data Guard Role: Physical Standby Con_ID: 3 Source: con_id 4 at cdb2 Transport Lag: (unknown) Intended State: APPLY-ON Apply State: Running Apply Instance: cdb1 Average Apply Rate: (unknown) Real Time Query: OFF Pluggable Database Status: SUCCESS DGMGRL> DGMGRL> show pluggable database pdb1_dg at cdb2; Connected to "cdb2" Pluggable database 'pdb1_dg' at database 'cdb2' Data Guard Role: Primary Con_ID: 4 Active Target: con_id 3 at cdb1 Pluggable Database Status: SUCCESS DGMGRL>

We can do a switchover to return the roles to their original settings.

dgmgrl /@cdb2 DGMGRL> switchover to pluggable database pdb1 at cdb1; Verifying conditions for Switchover... Connected to "cdb1" Connected to "cdb2" Source pluggable database is 'PDB1_DG' at database 'cdb2' Performing switchover NOW, please wait... Closing pluggable database 'PDB1_DG'... Switching 'PDB1_DG' to standby role... Connected to "cdb1" Waiting for 'PDB1' to recover all redo data... Stopping recovery at 'PDB1'... Converting 'PDB1' to primary role... Opening new primary 'PDB1'... Connected to "cdb2" Waiting for redo data from new primary 'PDB1'... Starting recovery at new standby 'PDB1_DG'... Switchover succeeded, new primary is "PDB1" DGMGRL>

Sometimes the switchover will hang, waiting for redo transfer. Sometimes this can be fixed by forcing a logfile switch on the database that was the primary when the switchover command was issued. Sometimes this doesn't work though.

# Node 1 sqlplus /@cdb1 as sysdba <<EOF alter system archive log current; exit; EOF # Node 2 sqlplus /@cdb2 as sysdba <<EOF alter system archive log current; exit; EOF

Always check the redo apply status using the "show pluggable database {pdb-name} at {cdb-name};" command. You may need to manually turn on the redo apply for the standby database on the relevant node.

# Node 1 dgmgrl /@cdb1 <<EOF edit pluggable database pdb1 at cdb1 set state=apply-on; exit; EOF # Node 2 dgmgrl /@cdb2 <<EOF edit pluggable database pdb1_dg at cdb2 set state=apply-on; exit; EOF

Failover PDB

A failover is only performed when there is something wrong with the primary PDB and we need to force the standby to become the primary.

Connect to the standby database and failover the pluggable database.

dgmgrl /@cdb2 DGMGRL> failover to pluggable database pdb1_dg AT cdb2; Verifying conditions for Failover... Connected to "cdb2" Connected to "cdb1" Source pluggable database is 'PDB1' at database 'cdb1' Performing failover NOW, please wait... Closing pluggable database 'PDB1'... Converting 'PDB1' to standby role... Connected to "cdb2" Waiting for 'PDB1_DG' to recover all redo data... Stopping recovery at 'PDB1_DG'... Converting 'PDB1_DG' to primary role... Opening new primary 'PDB1_DG'... Failover succeeded, new primary is "PDB1_DG". DGMGRL>

The original primary PDB is not automatically initiated as a standby PDB. Assuming there is nothing fundamentally wrong with the PDB, which caused the failover in the first place, we can convert it to a standby by turning on redo apply.

DGMGRL> edit pluggable database pdb1 at cdb1 set state=apply-on; Succeeded. DGMGRL>

Thoughts

- This is new functionality and feels a little buggy to me. You may want to wait a couple of versions before using this for something real.

- Rather than doing Data Gard for PDBs, I would prefer to have instances containing those PDBs that must be protected by Data Guard, and separate instances containing those PDBs that do not need data guard protection. That way I can just use regular data guard.

- Always check the redo apply status of the standby PDB before initiating a switchover or failover. If there is something wrong with the redo apply, you might get stuck without a working primary.

- Unlike failover at the CDB level, failover of a PDB seems to only work if both PDBs are working normally. If you turn off the primary instance and attempt to failover to the standby PDB, the "Verifying conditions for Failover" section will fail. Adding the "immediate" keyword to the end of the failover command is meant to force the failover, but although it no longer has the connection error, it may still complain about the lack of redo apply. It doesn't feel as robust as CDB data guard.

- A few times I got myself into such a mess, the easiest thing to do was to rebuild the test environment. That's not a great situation to be in when using a HA/DR feature.

For more information see:

- Scenarios for Using DGMGRL with a DG PDB Configuration

- Data Guard : The PREPARE DATABASE FOR DATA GUARD Command in Oracle Database 21c

- Read-Only Oracle Homes in Oracle Database 18c

- Data Guard Quick Links : 11gR2, 12cR1, 12cR2, 18c, 19c, 21c, All Articles

Hope this helps. Regards Tim...