Docker : An Oracle DBA's Guide to Docker

This article gives a basic introduction to some Docker concepts, focusing on those areas that are likely to interest Oracle DBAs.

- None of This Will Make Sense

- Virtual Machines vs Containers

- Layers, Images and Containers

- Generic Images

- Smaller is Better

- Never Connect to Your Container

- Never Do Manual Installations or Configuration

- Don't Upgrade. Rebuild

- Never Run Apps as Root

- The Kernel is Shared

- Can You Trust Pre-Defined Images?

- Docker for Oracle Databases

- Purest or Not?

None of This Will Make Sense

I think many people will be confused by some of the concepts of Docker without spending some time playing with it. Over the years I had read a lot of blog posts and seen a number of presentations and really thought I understood what Docker was all about. Within a few minutes of using it, I realised all my ideas about it were wrong.

My advice would be to start using it, then make up your mind if it is right for you. If my experience is anything to go by, trying to do it the other way round won't work. None of this will make sense until you've tried it! :)

Virtual Machines vs Containers

Virtualization is well established in the data center these days. Most cloud services are run inside virtual machines and most companies use virtualization in their own data centers. As a result, the pros and cons of virtualization are reasonably well known amongst Oracle DBAs and developers. If you need more background in this area you might want to read this.

Those who have experience of Solaris Zones or Linux Containers will have an idea of what containers do, but that idea may be a little different from the focus of Docker. For example, you may have used Solaris Zones as lightweight virtualization, allowing you to cram more workload onto the hardware than was possible with virtualization. To understand this, let's describe some basic differences between virtualization and containers.

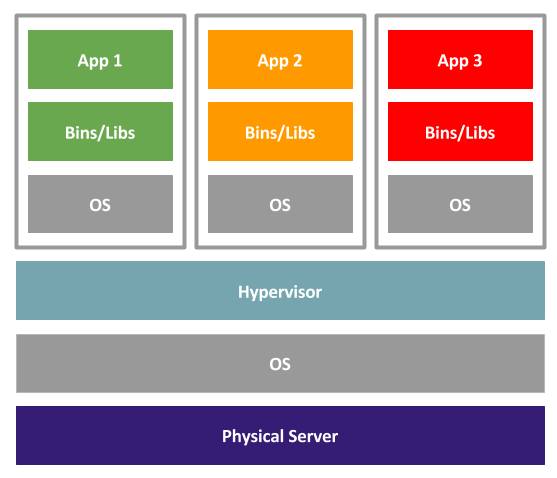

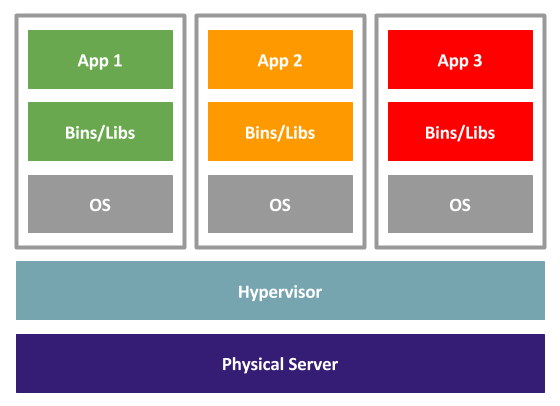

A type 2 hypervisor runs on top of a host operating system, dividing up resources to allow multiple virtual machines to run, each with a complete operating system installation. These type 2 hypervisors are commonly found on desktops, not in the data center.

A type 1 hypervisor installs on the bare metal, but essentially performs the same operations as a type 2 hypervisor. There is still a full operating system installed on each VM. The hypervisor can optimize the way the physical resources are used, but ultimately you will have a full operating system running for each VM, with all the overheads that entails.

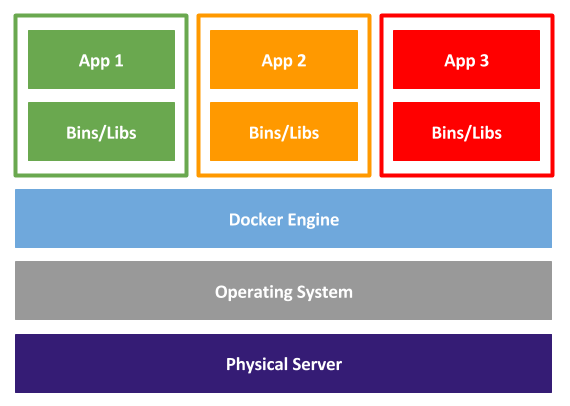

When using containers, there is a single operating system installed on the physical hardware, which each container makes use of. In the case of Linux containers, you are separating processes, but each container is using the same OS. In the case of Docker on Linux, each container shares the same kernel, but contains the libraries and binaries necessary to make the container look like the Linux distribution of your choice, as well as all the libraries and binaries necessary to run the application inside the container.

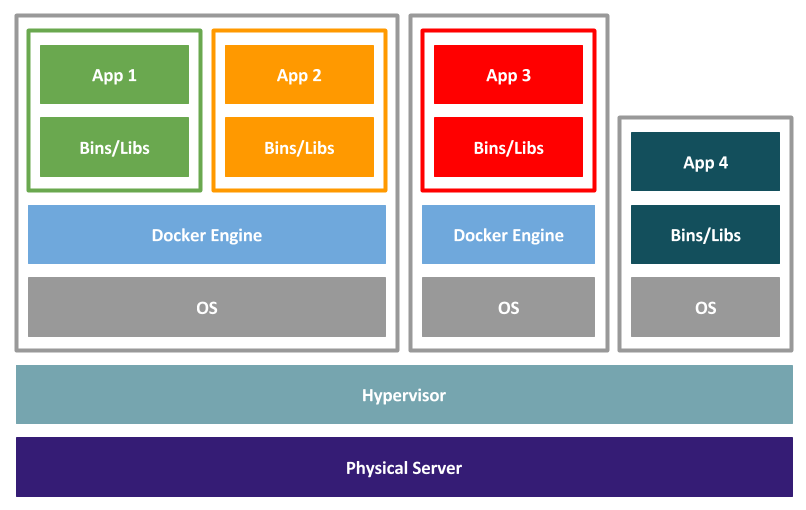

There is of course nothing to stop you running containers inside a virtual machine. The VMware implementation of containers does exactly that. Each container runs inside a very small footprint VM, which gives the complete isolation of a VM, but the lightweight feel of Docker. For your own data center you may end up with a hybrid model that mixes virtualization and containerization.

After viewing this it's very easy to think of Docker as lightweight virtualization, which is exactly what I did before I started to use it, but this is a mistake. Docker is focused on application delivery, with each container fulfilling a specific role. This is unlike virtualization, where you move your monolithic application from physical to a VM and continue to work in the same way.

There are lots of comparison lists you can Google that show differing degrees of bias. I'll just list a few points that stand out to me.

| Virtual Machine | Container |
|---|---|
| Full OS per VM. | Shared OS (kernel). |
| Higher overhead. | Lower overhead. |
| High level of isolation. Considered more secure. | Lower level of isolation. Considered less secure. |
| General purpose. | Application focussed. |
| Slow deploy, boot, drop. | Quick deploy, boot, drop. |
| Normal storage usage. Thin provisioning possible. | Copy-on-write. Only changes from base image stored. |

Layers, Images and Containers

You should probably start by reading this.

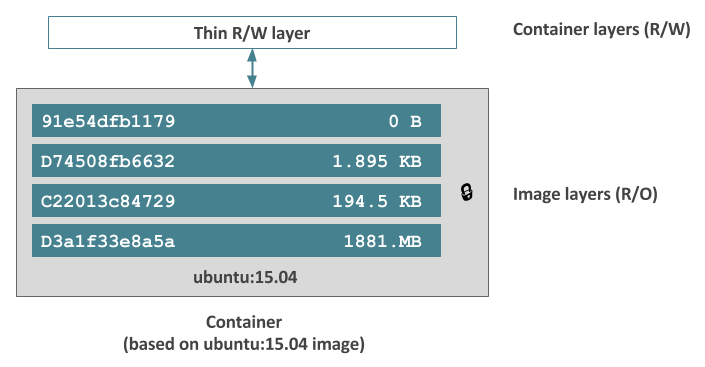

A Dockerfile is a series of commands or operations that define how to build an image. The image is built up of read-only layers, with each instruction in the Dockerfile resulting in a new read-only layer, based on the previous layer. The resulting image is also read-only.

We can see the current tagged images using the docker images command, and the layers that make up the image using the docker history command.

```
$ docker images
REPOSITORY          TAG                 IMAGE ID            CREATED             SIZE
ol7_ords            latest              c58888e8108f        22 hours ago        1.31GB
ol7_122             latest              cbf1f0c439de        22 hours ago        19.3GB
ubuntu              latest              00fd29ccc6f1        3 weeks ago         111MB
oraclelinux         7-slim              9870bebfb1d5        5 weeks ago         118MB
$
```

```
$ docker history ol7_ords:latest
IMAGE               CREATED             CREATED BY                                      SIZE                COMMENT
c58888e8108f        21 hours ago        /bin/sh -c #(nop) CMD ["/bin/sh" "-c" "ex...    0B
0f95c87445f0        21 hours ago        /bin/sh -c #(nop) HEALTHCHECK &{["CMD-SHE...    0B
b61c58611f2c        21 hours ago        /bin/sh -c #(nop) EXPOSE 8080/tcp 8443/tcp      0B
8667a2e3907a        21 hours ago        /bin/sh -c #(nop) USER [tomcat]                 0B
6fee5d634e37        21 hours ago        /bin/sh -c yum -y install unzip tar gzip ...    812MB
0ddf4d03bea9        21 hours ago        /bin/sh -c #(nop) COPY multi:9c4932d7567d2...   11kB
8fecd958a3e9        21 hours ago        /bin/sh -c #(nop) COPY multi:55788e282f198...   379MB
dcf84bb5cfda        21 hours ago        /bin/sh -c #(nop) ENV DB_HOSTNAME=ol7-122...    0B
b0354cd8480a        21 hours ago        /bin/sh -c #(nop) ENV JAVA_SOFTWARE=jdk-8...    0B
53e284313b73        21 hours ago        /bin/sh -c #(nop) LABEL maintainer=tim@or...    0B
9870bebfb1d5        5 weeks ago         /bin/sh -c #(nop) CMD ["/bin/bash"]             0B
<missing>           5 weeks ago         /bin/sh -c #(nop) ADD file:9061281688fde55...   118MB
<missing>           2 months ago        /bin/sh -c #(nop) MAINTAINER Oracle Linux...    0B
$
```

A container is a running instance of an image, but the container also has its own read-write layer, so it can make changes to the files presented from the base image. The file system changes made by the container are handled using copy-on-write, so only those files that are changed from the base image are stored.

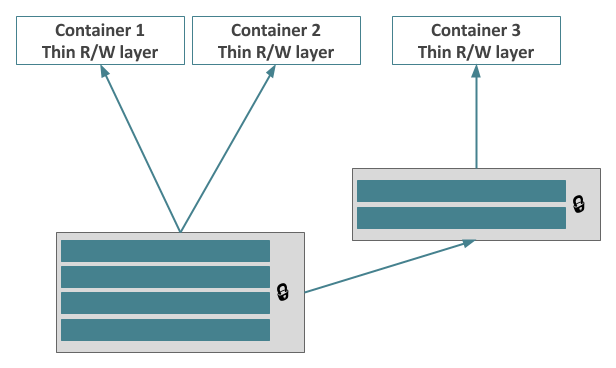

This means multiple containers can share the same base image, without having to duplicate the entire storage associated with that image. It also means new images can be based on existing common layers. This can result in substantial space savings when you have many similar containers running.
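You can see what is held in a container's read-write layer using the docker diff command. It lists each path added (A), changed (C) or deleted (D) relative to the image. The following is a minimal sketch of a shell function that summarises that output. The function name is hypothetical. The DOCKER variable is an assumption that lets you substitute a compatible CLI or a test stub.

```bash
# Minimal sketch: summarise the copy-on-write changes held in a container's
# read-write layer. "docker diff" prints one path per line, prefixed with
# A (added), C (changed) or D (deleted).
# DOCKER (assumed) defaults to "docker" but can point at a compatible CLI
# or a stub for testing.
summarise_container_changes() {
  local docker="${DOCKER:-docker}"
  local container="$1"

  "$docker" diff "$container" | awk '
    $1 == "A" { added++ }
    $1 == "C" { changed++ }
    $1 == "D" { deleted++ }
    END { printf "added=%d changed=%d deleted=%d\n", added, changed, deleted }'
}

# Example usage (container name is hypothetical):
# summarise_container_changes ords_con
```

Only the changed files show up. Everything else is still being read from the shared, read-only image layers.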

Generic Images

The use of layers, images and copy-on-write means there is a strong emphasis on building generic images that can be reused. To achieve this you will often see Dockerfiles that build a generic image, and perform all container-specific configuration the first time the container is run, using environment variables and mounted volumes to influence the container-specific configuration.

When you start defining your own builds, it's worth keeping this separation in mind, or you will end up with many similar images, which will waste a lot of space.
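To illustrate that separation, here is a minimal sketch of the kind of first-run logic a generic image might call from its startup script. It writes a hypothetical properties file into CONFIG_DIR, which is assumed to be a mounted volume. DB_HOSTNAME appears as an ENV instruction in the history output above. DB_PORT, DB_SERVICE, the file name and the default values are hypothetical. The values are supplied when the container is run, rather than being baked into the image.

```bash
# Minimal sketch of first-run configuration for a generic image.
# Assumptions (hypothetical): CONFIG_DIR is a mounted volume, and the image is
# started with environment variables, for example:
#   docker run -e DB_HOSTNAME=dbhost -v ords_config:/u01/config ol7_ords
configure_container() {
  local config_dir="${CONFIG_DIR:-/u01/config}"
  local config_file="${config_dir}/db.properties"

  # Container-specific values come from the environment, with defaults.
  local db_hostname="${DB_HOSTNAME:-localhost}"
  local db_port="${DB_PORT:-1521}"
  local db_service="${DB_SERVICE:-pdb1}"

  # The image stays generic. Configuration is only written the first time a
  # container starts against an empty config volume.
  if [ -f "${config_file}" ]; then
    echo "Existing configuration found in ${config_file}"
    return 0
  fi

  mkdir -p "${config_dir}" || return 1
  cat > "${config_file}" <<EOF
db.hostname=${db_hostname}
db.port=${db_port}
db.servicename=${db_service}
EOF
  echo "Created ${config_file}"
}
```

The same image can then serve many containers, each pointed at a different database, without a separate build for each one.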

Smaller is Better

As DBAs we are used to having quite a lot of software installed on our operating systems. A typical Linux database VM might include a window manager, like GNOME, an assortment of development tools and editors, as well as a number of operating system performance and diagnostic packages. With all the required dependencies, this can make the operating system quite large.

When building Docker images, there is a strong emphasis on keeping the images as small as possible. Typically images are based on a cut down version of the OS. In my case I typically use "oraclelinux:7-slim", which doesn't come with basic commands like unzip, tar and gzip. It's tempting to install "all the usual stuff" using Yum, but you should try to keep things as light as possible. Your aim should be to install the minimum amount of software to get the application running and no more. This can be quite challenging at first.

Never Connect to Your Container

I've heard it said a few times that if you have to connect to your container, you've failed. You will obviously be exposing ports to the outside world so people can connect to the application server or database running inside the container. The "never connect" rule is more to do with things like SSH connections. By default you don't get any SSH access to a container, and you shouldn't enable it. Interaction with the container should be strictly limited, so you don't get tempted to break the "Don't upgrade. Rebuild" rule. If you allow too much access to the container, it's easy to break the rules and cause problems during the next rebuild cycle. If you spot a problem that needs a configuration change, that should prompt a revision of the build, not a quick fix.
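Not connecting doesn't mean you can't see what is going on. Docker reports a fair amount about a running container from the outside. For example, docker logs shows the container's output. For images with a HEALTHCHECK instruction, like the one in the history output above, docker inspect reports a health status. The following minimal sketch polls that status without logging into the container. The function name and the default retry values are hypothetical. DOCKER is an assumed override for a compatible CLI or a test stub.

```bash
# Minimal sketch: wait for a container's HEALTHCHECK to report "healthy",
# observing it through the Docker CLI instead of connecting to it.
# DOCKER (assumed) defaults to "docker"; attempts and delay are example values.
wait_for_healthy() {
  local docker="${DOCKER:-docker}"
  local container="$1" attempts="${2:-30}" delay="${3:-10}"
  local i=1 status=""

  while [ "$i" -le "$attempts" ]; do
    # .State.Health is only populated for images that define a HEALTHCHECK.
    status=$("$docker" inspect --format '{{.State.Health.Status}}' "$container") || return 1
    if [ "$status" = "healthy" ]; then
      echo "$container is healthy"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done

  echo "$container not healthy after $attempts checks (last status: ${status:-unknown})" >&2
  return 1
}
```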

Never Do Manual Installations or Configuration

You should never need to manually install or configure software in your container. This is implied by the previous point, because you would have to connect to do such installations and configuration. If your image requires you to run commands externally to complete the installation or configuration, you probably need to revise your build process. If everything is done properly, you should only have to run, stop, start and remove containers to achieve your desired result. Anything else means you still have the possibility for human error, which means the process is not guaranteed to be repeatable everywhere.

Don't Upgrade. Rebuild

Once you have a running container, you should avoid making additional configuration changes to it, which includes upgrades and patches of applications and databases. Instead you should make the necessary changes in the Dockerfile and/or configuration scripts, rebuild the image, then drop and run a new container based on the updated image. This approach is quite foreign to most DBAs, who are used to applying patches and upgrades on the existing kit. It also presents some interesting challenges as far as Oracle database upgrades and patches are concerned.

With this in mind it is important to remember you are trying to make containers that are stateless. Anything important that shouldn't be thrown away when the container is dropped and run again, like your data files, should be held in persistent storage, not in the container itself. You can read about persistent storage here.
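A minimal sketch of that rebuild cycle follows. The image name ol7_ords comes from the docker images output above. The container name, the volume name and the /u01/data mount point are hypothetical. Because the data lives in a named volume, dropping the container doesn't lose it. DOCKER is an assumed override for a compatible CLI or a test stub.

```bash
# Minimal sketch of "don't upgrade, rebuild".
# Hypothetical names: the container, the "<container>_data" volume and /u01/data.
rebuild_and_replace() {
  local docker="${DOCKER:-docker}"
  local image="$1" container="$2" build_dir="${3:-.}"

  # 1. Rebuild the image from the revised Dockerfile and scripts.
  "$docker" build -t "$image" "$build_dir" || return 1

  # 2. Drop the old container. Errors are ignored in case it doesn't exist yet.
  "$docker" stop "$container" >/dev/null 2>&1 || true
  "$docker" rm "$container" >/dev/null 2>&1 || true

  # 3. Run a new container from the updated image. Persistent data is held in
  #    a named volume, so it survives the old container being dropped.
  "$docker" run -d --name "$container" \
    -v "${container}_data:/u01/data" "$image"
}

# Example usage (names are hypothetical):
# rebuild_and_replace ol7_ords:latest ords_con ./ords
```

Nothing in this sequence touches the inside of the running container. Every change goes through the build.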

Never Run Apps as Root

When you are using physical or virtual kit you very rarely run software as the root user. In contrast, people often feel safe taking the lazy approach and running applications (application servers and databases) as the root user inside a Docker container, believing that Docker somehow protects them from all the possible problems associated with this bad practice. It doesn't! You need to follow similar hardening practices for software running in Docker containers as you would with virtual machines or physical kit. This includes not running applications as the root user.
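In a Dockerfile this usually means creating an unprivileged user and switching to it with the USER instruction. The USER [tomcat] layer in the history output above is an example. As an extra safeguard, a startup script can also refuse to run as root. The following is a minimal sketch of such a guard. The function name is hypothetical, and the optional user ID argument exists only to make it easy to test.

```bash
# Minimal sketch: a startup-script guard that refuses to run the application
# as root. The optional argument overrides the detected user ID (for testing).
refuse_root() {
  local uid="${1:-$(id -u)}"
  if [ "$uid" -eq 0 ]; then
    echo "Refusing to start as root. Use a USER instruction in the Dockerfile" \
         "or docker run --user to select an unprivileged user." >&2
    return 1
  fi
  return 0
}

# Example usage at the top of a startup script:
# refuse_root || exit 1
```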

The Kernel is Shared

All containers share the same kernel, which has some interesting side effects. For one, it means containers are inherently less secure than VMs as there is less isolation between containers than there is between VMs. It also means kernel parameter settings have to be set in the host OS and must be suitable for all containers on the host. This is an important consideration when you have applications that require specific kernel parameter settings, such as Oracle databases.
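Because the kernel is shared, reading a non-namespaced parameter under /proc/sys from inside a container generally shows you the host's value. Docker's --sysctl option can set some namespaced parameters per container. These include IPC settings such as kernel.shmmax and kernel.sem, and net.* settings. Host-wide settings such as fs.file-max and fs.aio-max-nr still have to be set on the host. The following minimal sketch compares single-valued parameters against minimums. The function name is hypothetical, and PROC_SYS is an assumed override for testing. Treat the example values as illustrations and check them against the installation guide for your database release.

```bash
# Minimal sketch: check single-valued kernel parameters against minimums.
# PROC_SYS (assumed) defaults to /proc/sys and can point at a test directory.
check_kernel_param() {
  local proc_sys="${PROC_SYS:-/proc/sys}"
  local name="$1" minimum="$2"
  # Convert the sysctl name to a path, e.g. fs.file-max -> fs/file-max
  local path="${proc_sys}/$(echo "$name" | tr . /)"
  local current

  if [ ! -r "$path" ]; then
    echo "MISSING ${name}"
    return 2
  fi
  current=$(cat "$path")

  if [ "$current" -ge "$minimum" ]; then
    echo "OK   ${name}=${current}"
  else
    echo "LOW  ${name}=${current} (want >= ${minimum})"
    return 1
  fi
}

# Example usage with example minimums (check your release's install guide):
# check_kernel_param fs.file-max 6815744
# check_kernel_param fs.aio-max-nr 1048576
```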

In an attempt to solve some of these issues products like VMware launch each container in its own VM, using a small footprint Linux distribution, which results in the same level of isolation as a VM, while retaining the feel of Docker.

Can You Trust Pre-Defined Images?

Many companies provide pre-defined images, which save you from having to write your own Dockerfiles and build your own images. That's a great time saver, but you must do some due diligence before trusting an image. You need to make sure the person/company has done a good job building the image, following the appropriate best practices for Docker and the application software installed in the image.
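One simple check, which ties back to the point about running apps as root, is to look at the default user recorded in an image's configuration. The following minimal sketch uses docker image inspect to read .Config.User. An empty value means the container will run as root unless you override it. This is only one small part of due diligence, not a substitute for reviewing how the image is built. The function name is hypothetical, and DOCKER is an assumed override for a compatible CLI or a test stub.

```bash
# Minimal sketch: warn if an image's default user is root.
# An empty .Config.User means the image runs as root by default.
check_image_user() {
  local docker="${DOCKER:-docker}"
  local image="$1" user

  user=$("$docker" image inspect --format '{{.Config.User}}' "$image") || return 2

  case "$user" in
    ""|root|0|root:*|0:*)
      echo "WARNING: $image runs as root by default"
      return 1
      ;;
  esac
  echo "$image runs as user '$user'"
}
```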

Docker for Oracle Databases

You will see examples of running Oracle databases in Docker. It works fine and is now supported on the newer database releases. Despite this, I don't see myself running something as big and complicated as an Oracle database in a Docker container for production any time soon. Here are some things to consider.

- I mentioned the cut-down nature of the OS previously. This can make diagnosing even basic issues quite difficult. Many of the monitoring agents and tools you are used to may not be present within the container.

- Patching and upgrades of Oracle databases are problematic on Docker if you are trying to stay true to the Docker way of doing things. I've written about this here.

- Databases are much bigger than most applications and application servers. Using a common image for database software will save space, but the datafiles are unique, so the potential space savings are less relevant for databases on Docker. Remember, the database lifecycle is very different to that of an application server. As a result, monolithic databases don’t fit into the build and burn approach favoured by Docker.

- Most of the CPU and memory for a database server is used by the Oracle instance(s), not the OS, so the additional overhead of using separate VMs compared to multiple containers on one VM is not significant for all but the smallest of databases.

- There are some questions about the level of isolation provided by Docker, so from a security perspective you may prefer not to use it for your databases.

- If you require SSH access to your "database servers", you can't really do this in the same way with your Docker containers. You can't SSH directly to them. Instead someone must connect to the main server, and then connect to the container. This may be a security risk for anyone other than privileged users.

- Oracle database high availability (HA) products are complicated, often involving the coordination of multiple machines/containers and multiple networks. Real Application Clusters (RAC) and Data Guard don’t make sense in the Docker world. In my opinion Oracle database HA is better done without Docker, but remember not every database has the same requirements.

- Although Docker can limit resource usage (described here), Docker can’t be used to limit Oracle licensing. You have to license all cores on the host running the container. This makes mixed workloads problematic from a cost perspective. Only those with ULAs or carefully planned infrastructure can realistically consider Docker for Oracle workloads.
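The resource limits mentioned in the last point are set with docker run flags such as --cpus and --memory. The following minimal sketch wraps them in a function. The function name, container names and default values are hypothetical examples. The comment is the important part: the limits constrain what the container can use on the host, but they don't change the number of cores you need to license.

```bash
# Minimal sketch: run a container with CPU and memory limits.
# Names and default values are hypothetical examples.
run_with_limits() {
  local docker="${DOCKER:-docker}"
  local name="$1" image="$2" cpus="${3:-2}" memory="${4:-8g}"

  # --cpus and --memory cap the container's share of the host's resources.
  # They do NOT reduce the number of host cores that must be licensed.
  "$docker" run -d --name "$name" --cpus="$cpus" --memory="$memory" "$image"
}
```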

At this point in time I would probably only consider running Oracle databases in Docker for the learning experience, demos, short-lived dev/test environments and maybe some QA type scenarios. I can't see Oracle on Docker for production being considered normal for some time, if ever.

My experience of using MySQL in Docker is rather limited, but it does feel more of a possibility. It's more lightweight, is much simpler to patch and fits better with the rebuild and run again philosophy, provided you handle the persistent storage properly.

Where Docker shines is in the application layer, which is really why it came into being in the first place. Docker is great for building single purpose application servers, such as Apache HTTP and Tomcat servers. It's really interesting for the development pipeline, allowing you to easily fire up new instances of your application in multiple environments, which is great for continuous integration. For those working with microservice architecture I guess Docker is mandatory.

I've discussed this topic more in this blog post.

Purest or Not?

When you start using Docker for the first time, I would suggest trying to follow the purist approach until you get enough experience to understand where, if at all, it is causing you a problem.

It's easy to get locked into a purist mindset when reading the documentation, blogs and Stack Overflow, but remember it is up to you how you want to use this technology. It is OK if you choose to use Docker as lightweight virtualization. It is OK if you choose to make massive images with all the OS packages installed. It's OK if you choose to upgrade your Oracle database in an existing container, rather than doing a rebuild of the image. This is not the way Docker was intended to be used, and if you are in this mindset, you would be better off sticking with virtualization, but that doesn't mean Docker can't be used that way.

Hope this helps. Regards Tim...