8i | 9i | 10g | 11g | 12c | 13c | 18c | 19c | 21c | 23ai | Misc | PL/SQL | SQL | RAC | WebLogic | Linux

Automation Patterns for Typical DBA Tasks

This post describes some common automation patterns I use when automating typical DBA tasks. Not surprisingly, they look very much like the manual processes they replace.

Tools

There are some general tools we will use as we build up our automations. The specific tools are not that important. The important point is we have a tool that serves each role.

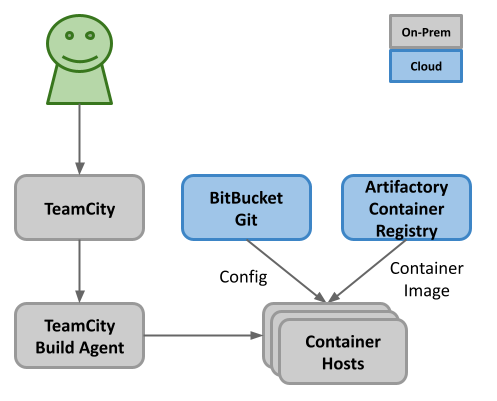

- Version Control System (VCS): All our builds and deploys should be driven by some form of scripting, which invariably means we need these scripts stored in a version control system. Git is an extremely popular version control system. We use BitBucket for our cloud-based git repositories, but any other cloud or on-prem VCS is fine. In future we may be moving to GitHub Enterprise.

- Automation Server: We need something to trigger builds and deployments. The trigger could be a Git commit, or a button press. We currently use TeamCity as our automation server, but we could easily switch to something like Jenkins, GitHub Actions or GitLab CI for example.

- Software Repository: We need somewhere to hold the software for our builds and patches. We use an on-prem installation of Artifactory for this, but it could be something as simple as a HTTP server or an SFTP server. We've stayed with on-prem for our software repository, as it reduces the time needed to download software to the servers.

- Container Registry: If you are using containers you will need a container registry. We want to build an image once and use that same image for all containers of the same type. The simplest way to do this is to build it and push it to a container registry. We use JFrog Platform (Cloud-Based Artifactory) for our container registry. It does add some latency for image push and pull operations, but the container images are small and we mostly update for patching purposes, so it works out fine.

I can't stress enough, the specific tool selection is not important. It's the role the tool plays that is important.

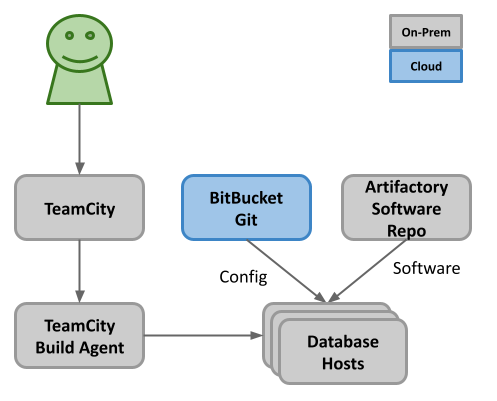

Database Patches

Over the last few years we've managed to standardise our database deployments, such that patching is identical across the all our Oracle databases.

The top-level process is as follows.

- When the quarterly Oracle security patches become available, we download them along with the latest OPatch and upload them to Artifactory, our software repository.

- We alter the config file in Git, which specifies the new patch details.

- During the appropriate patching window we trigger the patch in TeamCity, which initiates the patch of a specific database, or group of databases.

The patching process is as follows.

- The first operation is a "git pull" on the database server(s), to make sure it has the latest config files and patch script.

- The patch script downloads the relevant software from Artifactory onto the local server, applies the patches and deletes the software on completion.

You can read more about database patching here.

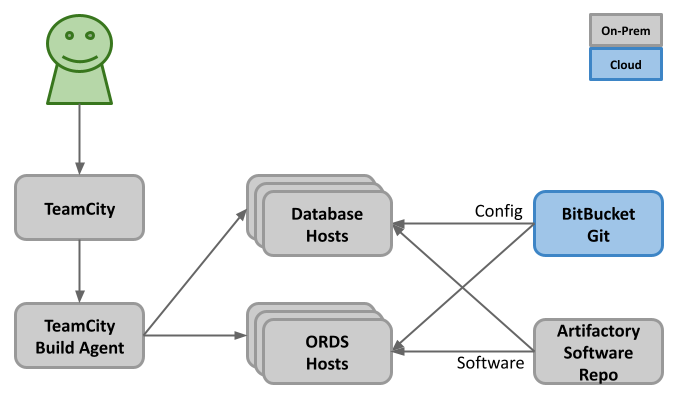

APEX Patches

APEX patching is relatively simple, but it is awkward making sure the static files (image, CSS etc) are distributed to all the middle tier servers.

The top-level process is as follows.

- When an APEX patch becomes available, we download it and upload it to Artifactory, our software repository.

- The APEX patches for a specific version of APEX always have the same file name, so we don't have to alter any config files in Git.

- During the appropriate patching window we trigger the patch in TeamCity, which initiates the APEX patch of a specific database, or group of databases, and deploys the static files to the relevant middle tier servers.

We use ORDS running in containers as our PL/SQL gateway, which also presents the APEX static files. We keep the static files on the host servers, and present them to the containers, which allows us to alter the static images without redeploying the containers, making the delivery of the static images much simpler.

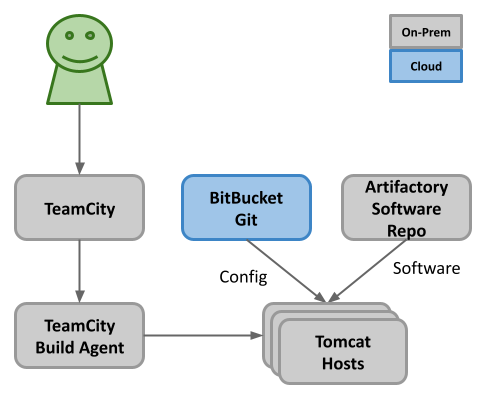

Tomcat Patches

We try to use containers for our middle tiers where possible, but we have some 3rd party products that are not supported in containers, so we have to do more traditional patching. We have a lot of Tomcat servers, so although Tomcat patching is simple, it's quite labour intensive to manually patch all the servers in an environment.

The top-level process is as follows.

- When the Tomcat and/or OpenJDK patches become available, we download them and upload them to Artifactory, our software repository.

- We alter the config file in Git, which specifies the new Tomcat and Java version details.

- During the appropriate patching window we trigger the patch in TeamCity, which initiates the Tomcat patch of a specific server, or group of servers.

The patching process is as follows.

- The first operation is a "git pull" on the tomcat server(s), to make sure it has the latest config files and patch script.

- The patch script downloads the relevant software from Artifactory onto the local server, stops Tomcat, unzips the Tomcat and Java software, alters symbolic links to point to the new software versions, starts Tomcat and cleans up the software.

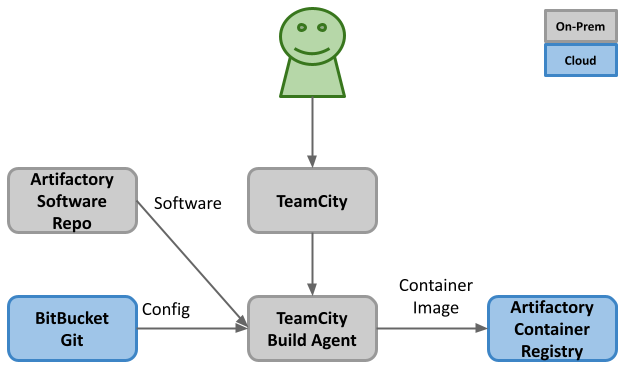

Container Builds and Deploys

We have several container images for different services, but the process of building them is similar.

The top-level image build process looks like this.

- When new base software becomes available, we download it and upload it to Artifactory, our software repository. In all cases it will include the base container OS, but the additional software will vary depending on the service. On some images we only add Java. On others we add Tomcat and Java. For our ORDS container images we add Java, Tomcat, ORDS and SQLcl.

- We alter the config file in Git, which specifies the new software version details.

- As soon as the previous steps are complete we trigger the build of the new image(s) in TeamCity. The build process creates the new image and pushes it to our container registry.

Remember, the build of the image is separate to the deploy of a container using this image, so the builds are completed before any deployments happen.

Deployments of containers can vary a little. In some cases the DBAs are in control of them, so we treat them like a traditional patching window. In some cases the developers are in control of them, so they pull the latest container image as part of their application deployment. Either way, TeamCity is the trigger.

The top-level deployment process looks like this.

- The container deployment is triggered in TeamCity. This may be manually triggered by a DBA in a security patching window, or triggered by a developer commit when deploying a new version of the application. Either way it is initiated by TeamCity.

- The deployment script pulls the latest version of the container image from our container registry, destroys the existing container, and runs a new container based on the new image.

The container deployments are really simple, because we never amend existing containers. They are removed and recreated.

Considerations

Here are some things to consider.

- The tools are irrelevant. It's the mindset that matters.

- Many of the DBA tasks we have automated have been scripted for years. We've just used the automation server (TeamCity in this case) to thread them together. Automation doesn't have to mean scrapping and replacing all your existing work.

- Most of the DBA tasks I've included here use shell scripting running on the servers. For less DBA-focused automations we use Terraform, Ansible and Python. As I keep saying, the tools are irrelevant. Use what works for you.

- These patterns are based on processes we've been doing for years. I'm not suggesting these are "the best" way to achieve automation. They just give you an overview of what we are doing for our DBA-focused tasks. Hopefully this will give you some inspiration if you want to build your own.

For more information see:

Hope this helps. Regards Tim...