Oracle Application Express (APEX) Production Environment Topology

This article gives an example of the topology necessary to deploy an APEX application safely in a production environment.

- Which gateway should I use for APEX?

- How do I deploy ORDS?

- Do you give direct access to ORDS on the application server?

- What do we do?

- What about high availability?

Related articles.

Which gateway should I use for APEX?

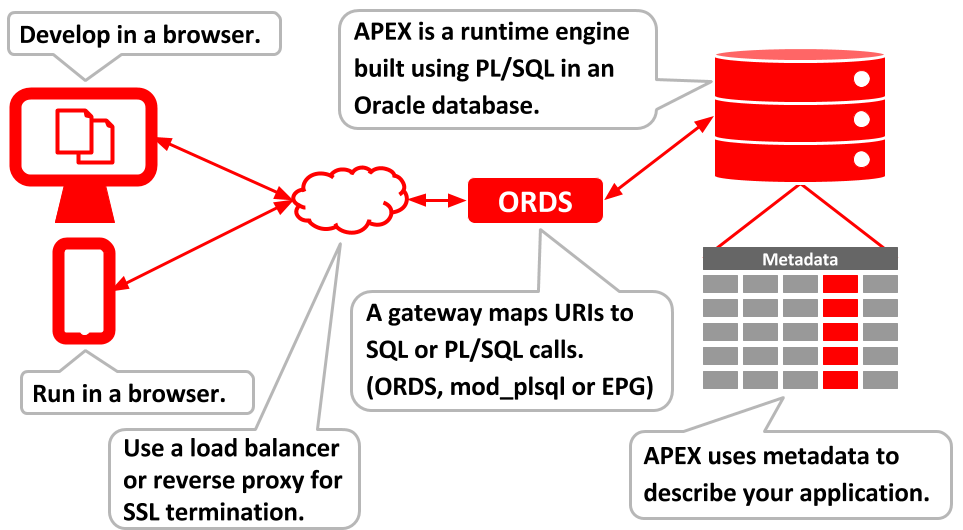

APEX runs in the database, but it needs a gateway to allow URLs to be mapped to database calls. You will often see diagrams such as the following to explain how things fit together.
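As a rough illustration of what "mapping URLs to database calls" means, the sketch below splits a classic APEX "f?p=" URL into the procedure name and its colon-separated arguments. It is not how any gateway is implemented. The "/ords" path and the `parse_apex_url` helper are assumptions made for the example, and newer APEX versions can also use friendly URLs that do not follow this pattern.

```python
from urllib.parse import urlsplit, parse_qs

# Positional fields of the classic APEX "f?p=" syntax, in order.
FIELDS = (
    "app", "page", "session", "request", "debug",
    "clear_cache", "item_names", "item_values", "printer_friendly",
)


def parse_apex_url(url: str) -> dict:
    """Split a classic APEX URL into the procedure name and its arguments.

    Hypothetical helper for illustration only.
    """
    parts = urlsplit(url)
    # The last path segment is the procedure the gateway calls ("f").
    procedure = parts.path.rsplit("/", 1)[-1]
    query = parse_qs(parts.query, keep_blank_values=True)
    # The single "p" parameter carries colon-separated positional values.
    p_values = query.get("p", [""])[0].split(":")
    return {"procedure": procedure, "args": dict(zip(FIELDS, p_values))}


# Example host and path are assumptions, not a real deployment.
call = parse_apex_url("https://my-application.example.com/ords/f?p=100:1:0")
print(call["procedure"], call["args"])
```

Only the fields present in the URL are returned, so a short URL such as "f?p=100" yields just the application ID.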

There are a number of gateways we can choose from, but which should we pick?

- Oracle REST Data Services (ORDS) : Yes!

- Embedded PL/SQL Gateway (EPG) : No! This is built into the database and can be used for demos, but I would advise against using it directly even for demos.

- Oracle HTTP Server (OHS) and mod_plsql : No! This used to be the standard way to front any PL/SQL web toolkit applications, including APEX. The latest versions of OHS no longer support mod_plsql, so this is not a good choice going forwards.

- Apache and mod_owa : I've never used this. You can read more about it here.

How do I deploy ORDS?

There are a number of ways to deploy ORDS, but which should we pick?

- Deployed on Tomcat : This is the method I use. It's really simple to do it, and Tomcat comes with a whole bunch of options that make sense for production deployments. You can read about ORDS on Tomcat here.

- Standalone Mode : This is supported for production, and this is the way Oracle run ORDS in the cloud. If standalone mode ticks all your boxes, feel free to use it. You can read more about standalone mode here.

- Deployed on WebLogic : If you already have WebLogic and you really don't want to use Tomcat or standalone mode, fine, but it feels like using a sledgehammer to crack a walnut. The Oracle stance is, "Use WebLogic if you need integration with Enterprise SSO solutions".

Do you give direct access to ORDS on the application server?

I would never recommend giving direct access to any application server, and I prefer not to give direct access to regular web servers either. Instead I would suggest fronting everything with a load balancer or a reverse proxy (using Apache or NGINX). Typically we use a load balancer. This extra layer is useful for the following reasons.

- You can keep your real certificates (from a certificate authority (CA)) in one location, and do all your SSL termination at this layer.

- The load balancer or proxy can sit in a separate network zone to the other servers, adding an extra layer of separation from the important stuff.

- The load balancer acting as a reverse proxy, or a regular reverse proxy, can allow you to use nice URLs, rather than having to access machines on specific ports. For example, you might proxy this, "https://my-application.example.com", to this, "https://my-server.example.com:8443".

- APEX URLs are not the friendliest to give people. You can set up neat redirects in this layer that look more presentable in an email, and bounce people across to the real location. It's just a convenient jump-off point.

- It can add an extra layer of security by preventing cross-site scripting. A specific host "my-application.example.com" can be locked down to a specific URI "/f?p=100" if needed.
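The decisions described in the list above can be sketched as a small routing function. This is only a model of the logic, not a load balancer or proxy configuration. The routing table, the redirect table, the "/ords" path and the `decide` function are all hypothetical names introduced for the example. A real lock-down would also need to allow the static files and other resources an APEX page requests.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical routing table: public host -> internal backend and allowed APEX app.
ROUTES = {
    "my-application.example.com": {
        "backend": "https://my-server.example.com:8443",
        "allowed_app": "100",
    },
}

# Hypothetical friendly paths that bounce people to the real APEX location.
REDIRECTS = {
    ("my-application.example.com", "/"): "/ords/f?p=100",
}


def decide(host: str, target: str) -> tuple:
    """Return (action, detail) for a request to `host` with request target `target`."""
    route = ROUTES.get(host)
    if route is None:
        return ("reject", "unknown host")

    parts = urlsplit(target)

    # Friendly URL: send the browser to the real location.
    redirect = REDIRECTS.get((host, parts.path))
    if redirect is not None and not parts.query:
        return ("redirect", redirect)

    # Lock the host down to a single APEX application ID.
    app = parse_qs(parts.query).get("p", [""])[0].split(":")[0]
    if app != route["allowed_app"]:
        return ("reject", "application not allowed on this host")

    # Otherwise pass the request through to the internal server and port.
    return ("proxy", route["backend"] + target)


print(decide("my-application.example.com", "/"))
print(decide("my-application.example.com", "/ords/f?p=100:1"))
print(decide("my-application.example.com", "/ords/f?p=200:1"))
```

The three calls show a redirect from the friendly URL, a request passed through to the back end, and a request rejected because it asks for a different application.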

What do we do?

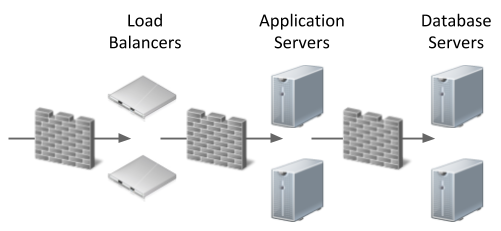

I thought it might be good to give a real example of what we do in my current company. If you are not used to using all the pieces it can seem a bit daunting, but it's actually pretty simple. Your preferred solution will depend on your requirements, knowledge and company policies. We follow the same pattern whether we are using ORDS to front APEX applications, or just to deliver web services.

- Load Balancers : We have load balancers (F5 Big IP) that act as a proxy and also provides SSL Termination. All our real certificates are in the load balancer. It then re-encrypts traffic to the Tomcat+ORDS servers using self-signed certificates, because it's internal-only traffic at this point. The load balancers are in a "web zone" of the network controlled using a firewall. This means a specific alias is DNSed to a specific VIP on the load balancers, which is routed to the relevant pool of servers. Comms from the load balancer use a specific SNAT per service (sometimes groups of services), which can talk to a specific port on the destination server(s). There is no cross-site-scripting possible and it can even lock down a host in the URL to a specific APEX application if necessary.

- ORDS : Each functional area, roughly one per database, has its own Tomcat+ORDS installation running inside a container, with each container exposing 8443 to a different physical port on the host server. The servers hosting containers sit in an "application server" network zone. A firewall provides point-to-point rules between network zones, and we also use the local firewall on the servers that host the containers. ORDS is pretty lightweight, so we can pile a bunch of these containers on each server. You can read more about ORDS in containers here. We use Oracle Linux for all our servers, including the Docker and Podman servers.

- Database : Connections from ORDS to the database use Native Network Encryption. The databases are kept in a separate "database zone" of the network. A firewall provides point-to-point rules between network zones, and we also use the local firewall on the database servers.

The overall picture looks a little like this.

The net result, assuming we've not made mistakes, is that any specific alias "my-app.example.com" can be made available externally, to the campus/company only, or to specific individuals, without allowing access to other services.
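One way to think about the point-to-point rules between the web, application server and database zones is as an allow list where anything not listed is denied. The sketch below models that idea only. The host names, the SNAT name and the port numbers (including 1521 for the database listener) are assumptions for the example, not our actual rules.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    source: str
    destination: str
    port: int


# Hypothetical point-to-point rules. Anything not listed is denied.
ALLOWED = {
    # Web zone -> application server zone: one SNAT per service, one host port per container.
    Rule("lb-snat-app1", "container-host-1", 8443),
    Rule("lb-snat-app2", "container-host-1", 8444),
    # Application server zone -> database zone.
    Rule("container-host-1", "db-host-1", 1521),
}


def is_allowed(source: str, destination: str, port: int) -> bool:
    """Return True only if an explicit rule permits this flow."""
    return Rule(source, destination, port) in ALLOWED


print(is_allowed("lb-snat-app1", "container-host-1", 8443))  # permitted flow
print(is_allowed("lb-snat-app1", "container-host-1", 8444))  # another service's port
print(is_allowed("lb-snat-app1", "db-host-1", 1521))         # web zone straight to database
```

Because each service has its own source address and destination port, opening one alias to a wider audience does not open a path to any other service.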

What about high availability?

Please read this before continuing.

APEX is a runtime engine inside the database, so it is only as available as the database itself. There are a number of ways to improve the availability of an Oracle database including the use of RAC and/or Data Guard. Your choice may depend on a number of factors, including cost and staff skills.

Making ORDS more highly available simply requires additional nodes (Servers, VMs or Containers) behind your load balancer.

Regarding the load balancer, clearly this can't be a single service. It must be highly available itself. How that is achieved depends on the technology being used.
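To show why extra ORDS nodes behind a load balancer improve availability, here is a minimal sketch of round-robin selection that skips nodes marked as unhealthy. It is a simplified model and not how any particular load balancer works. The `Pool` class and the node names are hypothetical.

```python
from itertools import cycle


class Pool:
    """A simplified pool of ORDS nodes with round-robin selection."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.healthy = set(self.nodes)
        self._ring = cycle(self.nodes)

    def mark_down(self, node):
        """Record a failed health check for a node."""
        self.healthy.discard(node)

    def mark_up(self, node):
        """Record a passed health check for a known node."""
        if node in self.nodes:
            self.healthy.add(node)

    def pick(self):
        """Return the next healthy node, trying each node at most once."""
        for _ in range(len(self.nodes)):
            node = next(self._ring)
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy ORDS nodes available")


# Node names are assumptions for the example.
pool = Pool(["ords-1:8443", "ords-2:8443"])
first, second = pool.pick(), pool.pick()  # alternates between the two nodes
pool.mark_down("ords-1:8443")
third = pool.pick()                       # only the surviving node is used
print(first, second, third)
```

With a single node in the pool, one failure takes the service down. With two or more, requests keep flowing to whichever nodes still pass their health checks.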

Hope this helps. Regards Tim...