Here’s a little story about how things are all the same but different…

Software Sprawl

Let’s cast our minds back to the bad old days, when x86 machines were so underpowered that the thought of using them for a server was almost laughable. In those days the only option for something serious was to use UNIX on kit from one of the “Big Iron” vendors.

The problem was that these machines were very expensive, so we ended up with loads of software installations on a single box. Sometimes we would have many versions of Oracle installed on one machine, running databases of many different versions, and in some cases those same machines ran middle-tier software as well.

It may have been a small number of servers, but it was a software sprawl. To try to add some isolation to the sprawl we might have resorted to things like LPARs or Zones, but often we didn’t.

Physical Server Sprawl

Fast forward a few years and x86 kit became a viable alternative to big iron. In some cases a much better alternative. We replaced our big iron with many smaller machines. This gave us better isolation between services and cleaned up our software sprawl, but now we had a physical server sprawl, made up of loads of underutilized servers. We desperately needed some way to consolidate services to get better utilization of our kit, but keep the isolation we craved.

Virtual Machine (VM) Sprawl

Along came virtualization to save the day. Clusters of x86 kit running loads of VMs, where each VM served a specific purpose. This gave us the isolation we desired, but allowed us to consolidate and reduce the number of idle servers. Unfortunately the number of VMs grew rapidly. People would fire up a new VM for some piddling little task, forgetting that operating system and software licenses were a thing, and that each virtualized OS came with its own overhead.

Before we knew it we had invented VM sprawl. If ten VMs are good, twenty must be better, so let’s put all of them on a physical host with 2 CPUs and one hard disk, because that’s going to work just fine! 🙁

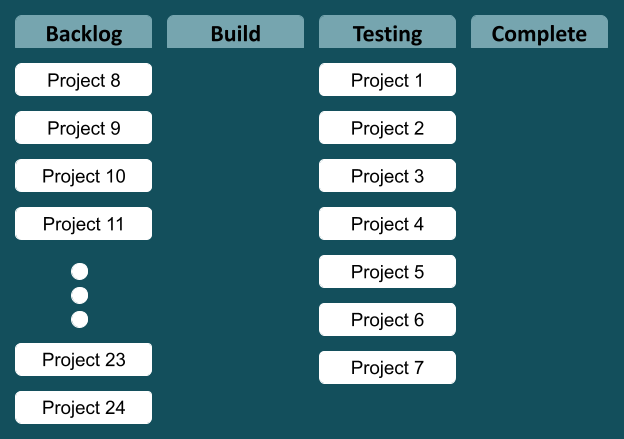

Container Sprawl

Eventually we noticed the overhead of VMs was too great, so we switched to containers, which had a much lower overhead. That worked fine, acting almost like lightweight virtualization, but it wasn’t special enough, so we had to make sure each container did as little as possible. That way we would need 50 of them working together to push out a little “Hello World” app. Managing all those containers was hard work, so we had to introduce new tools to cope with deploying, scaling and managing containerized applications. These tools came with their own overhead of extra containers and complexity.

We patted ourselves on the back, but without knowing it we had invented container sprawl, which was far more complicated than anything we had seen before.

Cloud Sprawl

Managing VM and container sprawl ourselves became too much of a pain. Added to that, the limits of our physical kit were a problem. We couldn’t always fire up what we needed, when we needed it. In came the cloud to rescue us!

All of a sudden we had limitless resources at our fingertips, and management tools to allow us to quickly fire up new environments for developers to work in. Unfortunately, it was a bit too easy to fire up new things, and the myriad environments built for every developer ended up costing a lot of money to run. We had invented cloud sprawl. We had to create some form of governance to control the cloud sprawl, so it didn’t bankrupt our companies…

What next?

I’m not sure what the next step is, but I’m pretty sure it will result in a new form of sprawl… 🙂

Cheers

Tim…

PS. I know there was a world before UNIX.

PPS. This is just a fun little rant. Don’t take things too seriously!