Someone on YouTube asked me some general questions about my experience of Vagrant, so I thought I would write them down as a blog post.

Could you share the story of when and how you first encountered Vagrant, and how did you feel about it at the time?

I was quite late to the party. In 2017 I was at a VMware workshop in Cork, Ireland. I was sitting in the hotel and Frits Hoogland was showing me his Vagrant build for a test Oracle database. Like most things when they are unfamiliar, it seemed a little complex. He gave me access to his Vagrant repository, but I hardly looked at it. It was on my list of things to do, but there is always so much on my to-do list. When Frits talks you should listen, but unfortunately I failed that mission. 🙂

About a year later a colleague at work asked me what Vagrant was, and I struggled to give a reasonable answer. That evening I Googled it, and tried a couple of really simple builds. As someone with lots of VMs at home it totally blew my mind. From that point on I was hooked. I wrote Vagrant builds for all my test databases, so I could rebuild them whenever I wanted to. I went from never using it, to never shutting up about it overnight.

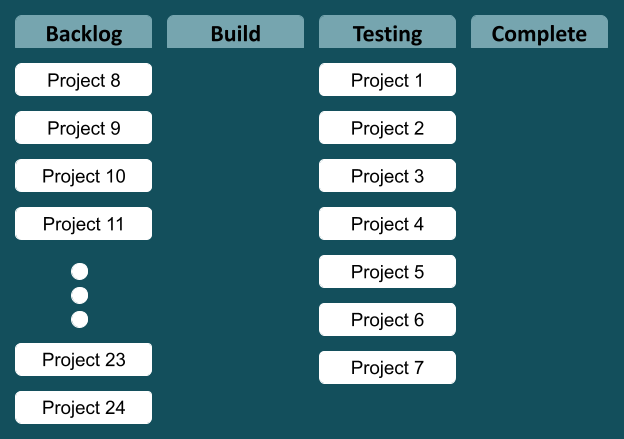

Now my PC is a lot less bloated. I don’t have to keep loads of VMs for different database versions, RAC and Data Guard etc. If I need something to do a test I just build it from scratch.

Another benefit is it makes live demos feel a lot less stressful. I remember being in my hotel in India, and a few minutes before I was due to start presenting I was having some issues with my demo VM. I just typed “vagrant destroy -f” followed by “vagrant up” and my demo system was rebuilt and I was good to go. Nightmare averted, and no need for loads of backups and snapshots.

How long does it usually takes for you to create the builds that are used to create the database?

The first couple of real builds took some time as there was a learning curve with Vagrant. Fortunately I have a lot of Oracle skills and some basic system administration skills, so it wasn’t too bad. It would have been a lot harder if I was trying to pick up several new skills at the same time.

All my Vagrant builds are based on articles, so I already know what to do. I’m basically tweaking the instructions from my articles to form the Vagrant builds.

The more vagrant builds you do, the quicker you get at doing them, because you have a repository of previous builds to pull ideas from. I’m now at the point where most new builds are slight variations of a previous build, so they are really quick to write.

How are you using Vagrant at work? I assume that some companies will require us to install the database with its options in a bare metal server, and not with VMs.

I use Vagrant at home. All my writing is based on VMs I’m running at home. Those VMs are built using Vagrant.

Automation at work is a little tricky because there is a separation of duties between virtualization, system administration and DBA teams. Configuring a complete automation is quite time consuming and political. Terraform and Ansible are more commonly used at work, but we are still on that journey. We are less DevOps and more DevHopeful. 🙂

Our cloud automations all use Terraform. Terraform is similar to Vagrant, as both are produced by Hashicorp.

The tools you use for automation are not as important as the attitude. Once you get into automation you can switch between tools a lot more easily, because you understand the approach you need to take.

How does using Vagrant help you in serving your customers?

Using Vagrant at home makes it quick to set up new environments, which allows me to learn new stuff faster. I don’t like doing anything at work unless I’ve already tried it at home. I guess me knowing more helps me do my job better, which ultimately benefits the people depending on me at work, so I guess there is an indirect relationship. 🙂

How do you learn Vagrant?

If you want to know more about Vagrant you can start here.

I find the easiest way to learn about Vagrant is to build things. There are loads of builds on the internet to use for inspiration. You can find mine here.

Cheers

Tim…