You’ve probably heard that Oracle have made some training and certifications free in recent times (here). We are approaching the end of that period now. Only about 15 more days to go.

Initially I thought I might try and do all the certifications, but other factors got in the way, so I just decided to do one. You can probably guess which one by the title of this post. 🙂

I had seen a few people speaking about their experiences of the training videos, so I thought I would give my opinions. Remember, this is my opinion of the training materials and exam, not my opinion of the cloud services themselves. I am also aware that this was free, so my judgement is going to be different from what it would be if I had paid for it.

The Voices in the Videos

A number of people have been critical of the voices on the training videos. I really didn’t see a problem with them.

When you record videos and do presentations you have to decide who your target audience is. A large number of people who use Oracle have English as a second language. Having spent years presenting around the world, I’ve learned you have to slow down a bit, or you lose some of the audience. I do this on my YouTube videos, and it can make them sound a bit monotone at times. When I’ve recorded my videos at my normal talking speed, people have responded to say they were brutally fast. You can’t please everyone. You have to make a choice, and for some professional training materials that probably means speaking slower.

I listened to most of these training videos at 1.5x speed and it was fine. The fact I wanted to listen to it this way is not a criticism of the training. I’ve listened to a number of Pluralsight courses at 1.7x speed, and I tend to listen to non-fiction Audible books at a higher speed. You just have to find what works for you.

It’s just my opinion, but I thought the voice was fine.

Content Inconsistencies

There are inconsistencies between the training materials and the documentation. I originally listed some, but I don’t think it’s really helpful. As with any training material, I think it’s worth going through the training material and documentation at the same time and cross-referencing them, as well as trying stuff out if you can. It helps you to learn and it makes sure you really know what you are talking about.

Why are there inconsistencies? I suspect it’s because the cloud services have changed since the training materials were recorded. Remember, there is a quarterly push to the cloud, so every three months things might look or act a little different.

What should you do? Where the two diverge, I would suggest you learn both the training material and the reality, but assume the training material is correct for the purpose of the exam, even if you know it to be wrong in reality. This is what I’ve done for all previous certifications, so this is nothing new to me.

How did I prepare?

As mentioned above, I watched the videos at 1.5x speed. For any points that were new to me, or where I had suspicions about the accuracy, I checked the docs and my own articles on the subject. I also logged into the ADW and ATP services I’m running on the Free Tier to check some things out.

I did the whole of this preparation on Sunday, but remember I’ve been using ADW and ATP on and off since they were released. If these are new to you, you may want to take a little longer. I attempted to book the exam for Monday morning, but the first date I could get was late Wednesday.

Content

The training content is OK, but it contains things that are not specific to Autonomous Database. Sure, they are features that can be used inside or alongside ADB, but I would suggest they are not really relevant to this training.

Why? I think it’s padding. Cloud services should be easy to use and intuitive, so in many cases I don’t think they should need training and certification. They should lead you down the right path and warn of impending doom. If the docs are clear and accurate, you can always dig a little deeper there.

This certification is not about being a DBA or developer. It’s about using the ADB services. I don’t think there is that much to know about most cloud services, and what really matters goes far beyond the scope of online training and certifications IMHO. 🙂

Free

The training and certifications are free until the middle of May 2020, which is when the new 2020 syllabus and certifications for some of the content come out. By passing this free certification you are passing the 2019 certification, which will stay valid for 18 months. After that you will have to re-certify or stop using the title. I guess it’s up to you whether you feel a pressing need to re-certify or not.

Update: Some of the other training and exams are already based on the 2020 syllabus. Thanks to Adrian Png for pointing this out. 🙂

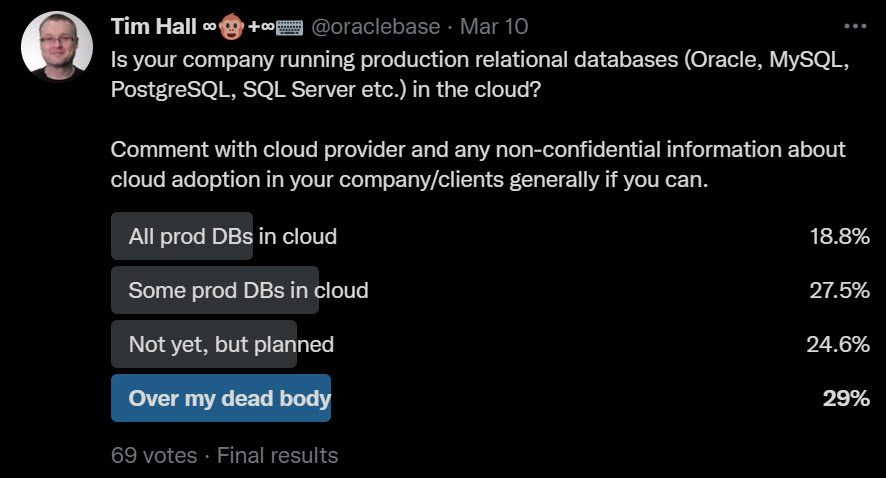

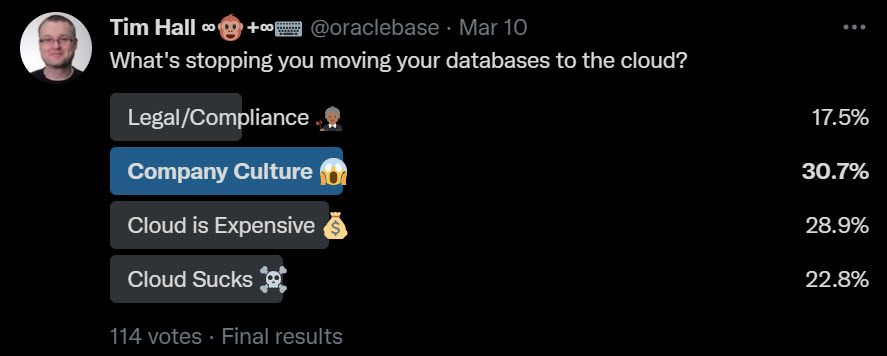

I’m sure this would not be popular at Oracle, but I would suggest they keep the cloud training and certifications free forever. Let’s be honest. Oracle are a bit player in the cloud market. They need all the help they can get to win hearts and minds. Making the cloud training and certification free forever may help to draw people in. I don’t see this type of material as a revenue stream, but I’m sure some folks at Oracle do.

From what I’ve seen, the training materials are entry level, and something I would encourage people to watch before using the services, so why not make them free? That’s rhetorical. I know the answer. 🙂

Would I pay for it?

No. I watched the material to get a feel for what they included. I’m not saying I already knew everything, because I didn’t, but I knew most of what I wanted to know before using this stuff. Of course, if I had come in clean, this would have been pretty helpful, I guess, but I think it would have been just as easy for me to use some of the online docs, blog posts and tutorials to get to grips with things. That’s just my opinion though. Other people may feel differently.

Would I have sat the exam if I had to pay for it? No. I don’t think there is anything here that I wouldn’t expect someone to pick up during their first few hours of working with the service. It’s nice that it’s free, but I’m not sure it makes sense to pay for it.

What about the exam?

The exam just proves you have watched the videos and have paid attention. If someone came into my office and said, “Don’t worry, I’m an Oracle Autonomous Database Cloud 2019 Specialist. Everything is going to be OK!”, I would probably lead them to the door…

I don’t think the exam was hard so much as confusing at times. There were some questions I think need revision, but maybe I’m wrong. 🙂

What about doing the exam online?

This freaked me out a bit. You have to take photos of yourself at your desk, and photos of the room. Somewhere at Pearson VUE they have photos of my washing hanging up. 🙂 You are told not to touch your face, so as soon as I heard that my whole head started to itch. I started to read the first question out loud, and was told I had to sit in silence. I understand all the precautions, and they are fine. It just felt a bit odd. 🙂

So there you have it. Having promised myself I would never certify again, it turns out I’m a liar… 🙂 If you get a chance, give one of the training courses and exams a go. You’ve got nothing to lose. You can read more here.

Cheers

Tim…