I was reading about Amazon EKS Distro (EKS-D) and EKS Anywhere. For those of you not in the know, EKS stands for Elastic Kubernetes Service. What’s interesting about these new announcements is they will allow people to run EKS in Amazon AWS, on-prem and potentially in other cloud providers (Google, Azure, Oracle etc.). That got me thinking…

What if Oracle created “Autonomous Database Anywhere”? The suite of software that makes up the Autonomous Database, but packaged in a way it could be installed on-prem, but more importantly at other cloud vendors.

I happen to like the Autonomous Database product(s). It’s the start of a long journey, but I like the way it is headed. There are a couple of hurdles I see for companies.

- Learning to live with the restrictions of working in an Autonomous Database. When you want something done automatically, you have to give up an element of control and learn not to meddle too much.

- Some companies already have a big investment in “other clouds”, and won’t be jumping to Oracle Cloud any time soon.

I see the second point as a bigger stumbling block for a lot of people. Don’t get me wrong, I see the future as multi-cloud, but many companies are still in the early days of cloud adoption, and trying to jump to multi-cloud from day one may be a step to far.

I know Oracle provide alternative solutions like Cloud@Customer and Azure OCI Interconnect. Both are fine for what they set out to achieve, but neither really live up to the “Autonomous Database Anywhere” I was thinking about.

I understand the difficulties of this from a hardware, architectural, cultural and political standpoint. I just thought it was an interesting idea. Such is the way my mind wanders these days… 🙂

Cheers

Tim…

PS. I’m not expecting Oracle to even consider this. It was just a thought… 🙂

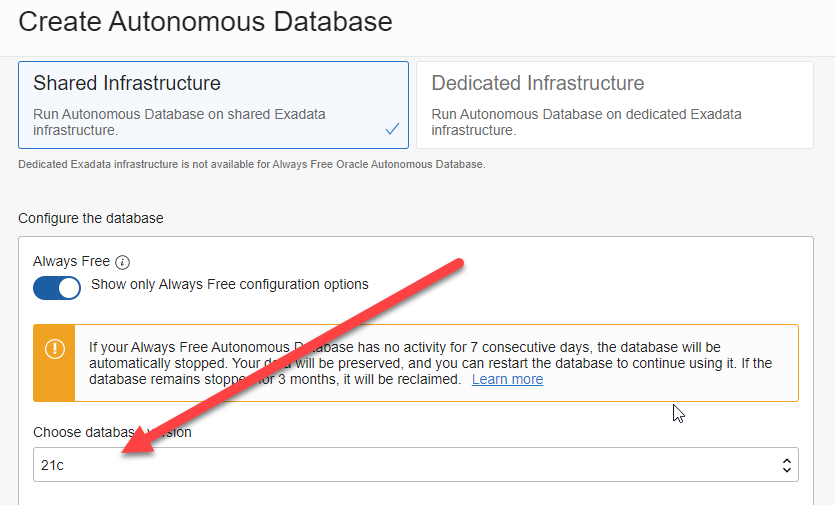

PPS. Oracle just released Oracle 21C Autonomous Database. Weird coincidence.

I signed up to a hands-on lab for the Autonomous Data Warehouse Cloud Service. These are the notes I took during the lab. They are a little scrappy, but I think you will get the idea…

I signed up to a hands-on lab for the Autonomous Data Warehouse Cloud Service. These are the notes I took during the lab. They are a little scrappy, but I think you will get the idea…