In case you didn’t know, countries occasionally change their time zones, or alter the way they handle daylight saving time (DST). To let the database know about these changes we have to apply a new database time zone file. The updated files have been shipped with upgrades and patches since 11gR2, but applying them to the database has always been a manual operation.

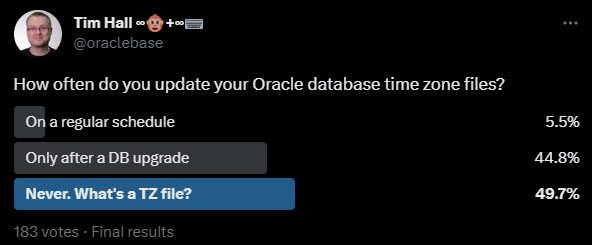

With the recent switch over to daylight savings time in the UK I decided to post this question on Twitter yesterday.

We get less than 6% of people updating their time zone files on a regular schedule. Nearly 45% who only do the updates after a database upgrade, and nearly 50% of people who never do it at all.

I can’t say I’m surprised by the results. In terms of the reasoning for these responses, I’ll reference some of the comments on Twitter.

Regular Schedule

“Every ru patch, also thanks to 19.18 it is included now and with out of place upgrade and autoupgrade, i dont do it anymore 🙂 all automatic.”

Mustafa KALAYCI

If you are using AutoUpgrade to patch to a new Oracle Home, then applying updated time zone files is really easy. Before 19.18 it’s just a single entry “timezone_upg=yes” in the AutoUpgrade config file. From 19.18 onward the update of the time zone file is the default action (see here).

So interestingly, there may be some people who don’t know they are applying an update of their time zone file, who actually are now…

After Upgrades

This feels like the natural time to do it for me, and it seems many other people feel the same.

As mentioned previously, AutoUpgrade makes it simple. From 21c onward AutoUpgrade is the main upgrade approach, even for those that have resisted using it for previous versions, so this question goes away from an upgrade perspective.

We can specifically tell it not to perform the action using “timezone_upg=no”, but I’m guessing most people will just go with the default action.

Never

“NEVER. As an American-only company with very little need for time-specific data, quite unnecessary. Horrible design with no rollbacks and headaches w/data pump. Just not worth it if possible to avoid”

Taylor

I totally understand this response. Many of us work with systems that are limited to our own country. Assuming our country doesn’t alter its own daylight savings time rules, then using an old time zone file is unlikely to cause an issue.

When you consider the number of people that run *very old* versions of Oracle, you can see that using old versions of the time zone file doesn’t present a major issue in these circumstances.

With reference to the data pump issue, I’ve experienced this, and it was also picked up in the comments.

“My hypothesis: Most do it when datapump tells they need to do it to get the import file they just received to load”

Connor McDonald

Offline/Online Operation

The point about this being an offline operation was raised.

“Well it is an offline operation, so pretty exceptional thing to do. Only in a rare case where some feature requires the upgrade – like DataPump failing or query over dblink failing.”

Ilmar Kerm

Downtime is never welcome, but it was also pointed out it can be an online operation in 21c.

“Offline will be a thing of the past…

Connor McDonald

Conclusion

It seems like the time zone file version is not high on the list of priorities for most people, providing it is not causing a data pump issue. I totally understand this, and I myself only consider it during database upgrades.

I always like reading these poll results. I know the sample size is small, but it gives you a good idea of how your beliefs compare to the wider audience.

If you are interested to know how to manually upgrade your time zone file, you can read about it here.

Cheers

Tim…